- Hardware and software environment of the HybriLIT platform

- Getting started: remote access to the platform

- SLURM task manager:

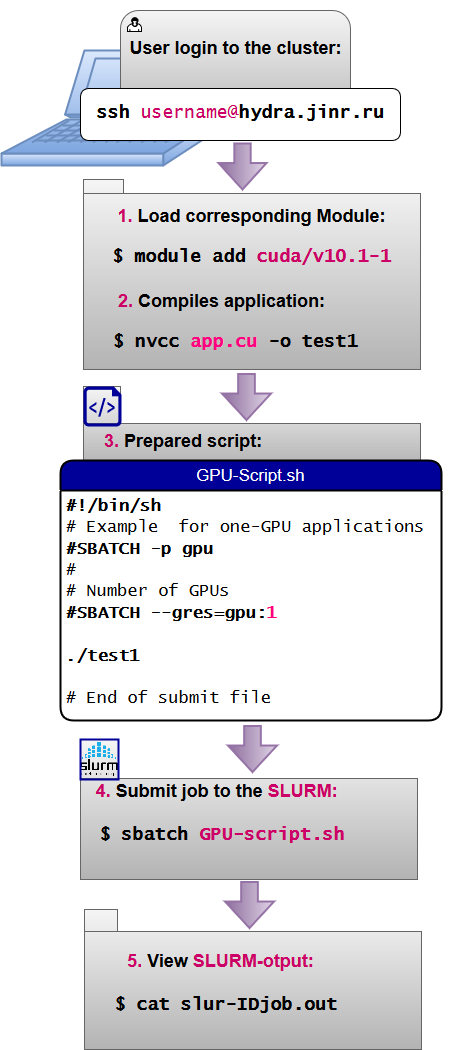

- 5 basic steps to carry out computations on the platform

- Compilation and launch of OpenMP-applications

- Compilation and launch of MPI-applications

- Compilation and launch of CUDA-applications

- Compilation and launch of OpenMP+CUDA hybrid applications

- Compilation and launch of MPI+CUDA hybrid applications

- Compilation and launch of MPI+OpenMP hybrid applications

Tutorial Video “Launch the Tasks”

Useful links:

Maxim Zuev, chief analyst, LIT JINR (in Russian)

Video by A.S. Vorontsov

Hardware and software environment of the HybriLIT platform

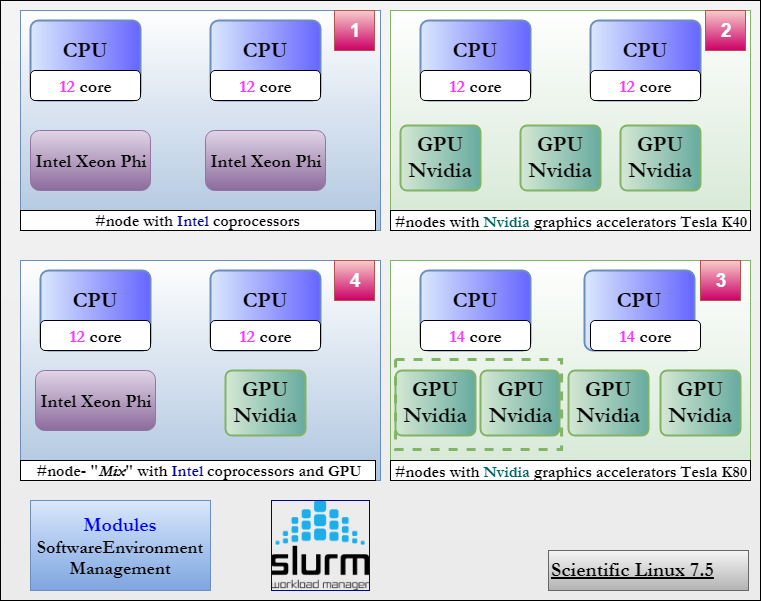

The HybriLIT heterogeneous computing platform contains computation nodes with multi-core Intel processors, NVIDIA graphics processors and Intel Xeon Phi coprocessors (see the “Hardware” section for more details).

Types of main computation nodes:

- Nodes with multi-core CPU and Intel Xeon Phi coprocessors.

- Nodes with multi-core CPU and 3 NVIDIA Tesla K40 graphics accelerators (GPUs).

- Nodes with multi-core CPU and 2 (4) NVIDIA Tesla K80 GPUs.

- Mix-blade with multi-core CPU, an Intel Xeon Phi coprocessor and an NVIDIA Tesla K20 GPU.

The heterogeneous computing platform runs Scientific Linux 6.7. It also includes the SLURM task manager and specific software: compilers and packages for development, debugging and profiling of parallel applications (including the Modules package).

Modules package

The Modules 3.2.10 package for dynamic modification of environment variables is installed on the platform. It allows users to switch between compilers for the basic programming languages (C/C++, FORTRAN, Java) and parallel programming technologies (OpenMP, MPI, OpenCL, CUDA), and to use the program packages installed on the platform. Users need to load the required modules before compiling an application.

Main commands for work with modules:

```shell
module avail                # list the available modules
module add [MODULE_NAME]    # add a module to the list of loaded ones
module list                 # list the loaded modules
module rm [MODULE_NAME]     # remove a module from the list of loaded ones
module show [MODULE_NAME]   # show the changes made by a module
```

Loaded modules are not saved from session to session. If you need the same set of modules in every session, add the corresponding command to your ~/.bashrc file:

```shell
module add [MODULE_NAME]
```
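As a minimal sketch of how this can be automated, the helper below appends a `module add` line to a startup file only if it is not already there. The function name `persist_module` and its optional second argument are illustrative assumptions, not part of the platform software; the module name in the usage comment is taken from the MPI section of this guide.

```shell
# Hypothetical helper: remember a module in a shell startup file.
# $1 - module name, $2 - startup file (defaults to ~/.bashrc)
persist_module() {
    module_name=$1
    rc_file=${2:-"$HOME/.bashrc"}
    line="module add $module_name"

    # Create the file if it does not exist yet.
    touch "$rc_file"

    # Append the line only when an identical line is not present.
    grep -qxF "$line" "$rc_file" || printf '%s\n' "$line" >> "$rc_file"
}

# Usage on the cluster (not executed here):
#   persist_module hlit/openmpi/3.1.3
```

Calling the helper repeatedly with the same module leaves a single line in the file, so it is safe to rerun.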

You can also load compilers and packages installed in cvmfs (CernVM File System) system.

CernVM File System

The CernVM-FS system gives users access to the software installed at CERN. The list of available packages can be viewed with the command below:

```shell
ls /cvmfs/sft.cern.ch/lcg/releases
```

Note that the /cvmfs/sft.cern.ch/ directory is mounted dynamically when its content is requested, and after some time of inactivity it may disappear from the list of available directories. You can mount it again with the same command:

```shell
ls /cvmfs/sft.cern.ch/lcg/releases
```

In order to use compilers and program packages, it is necessary to execute the command below:

```shell
source [PATH_TO_ENVIRONMENT_FILE]
```

The directory tree in cvmfs has a special structure. Let us examine it using the ROOT package as an example. The full path to the directory looks like this:

```shell
/cvmfs/sft.cern.ch/lcg/releases/ROOT/6.07.02-f644e/x86_64-slc6-gcc49-opt/
```

where 6.07.02-f644e is the version of the ROOT package, and the directory name x86_64-slc6-gcc49-opt reads as follows: x86_64 indicates the 64-bit version of the package; slc6 indicates that the package was compiled from source under Scientific Linux 6; gcc49 indicates that the package was compiled with gcc 4.9.
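The naming scheme can be taken apart mechanically. The sketch below splits the directory name from the example above on hyphens; the variable names (`arch`, `os`, `compiler`, `build`) are illustrative assumptions, and the meaning of the last field is not described in this guide.

```shell
# Directory name from the ROOT example above.
platform="x86_64-slc6-gcc49-opt"

# Split on "-" into four fields (POSIX sh, using a here-document).
IFS=- read -r arch os compiler build <<EOF
$platform
EOF

echo "architecture: $arch"
echo "OS:           $os"
echo "compiler:     $compiler"
echo "last field:   $build"
```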

Files with environment variables may have one of two names:

- setup.sh

For example, the command for using the gcc 4.9.3 compiler looks like this:

```shell
source /cvmfs/sft.cern.ch/lcg/releases/gcc/4.9.3/x86_64-slc6/setup.sh
```

- [PACKAGE_NAME]-env.sh

For example, for the ROOT 6.07.02 package, the command looks like this:

```shell
source /cvmfs/sft.cern.ch/lcg/releases/ROOT/6.07.02-f644e/x86_64-slc6-gcc49-opt/ROOT-env.sh
```

Getting started: remote access to the cluster

Remote access to the HybriLIT heterogeneous cluster is available only via the SSH protocol, at the following DNS address:

```text
hydra.jinr.ru
```

More detailed instructions for different operating systems are given below.

For Linux / Mac OS X users

Start the Terminal and type in the following:

```shell
$ ssh USERNAME@hydra.jinr.ru
```

where USERNAME is the login that you received after registration on the cluster and hydra.jinr.ru is the server address.

When asked for the password, enter the password for your account on the cluster.

Once you are authorized successfully on the cluster, you will see the following command line on the screen:

```text
[USERNAME@hydra ~] $
```

This means you have connected to the cluster and are in your home directory.

On the first attempt to access the cluster, you will be notified that the IP address you are trying to connect to is unknown. Type “yes” and press Enter; the address will then be added to the list of known hosts.

In order to launch applications with a GUI, start the Terminal and enter:

```shell
$ ssh -X USERNAME@hydra.jinr.ru
```

```shell
[USERNAME@hydra ~] $ ssh -X space31
```

For Windows users

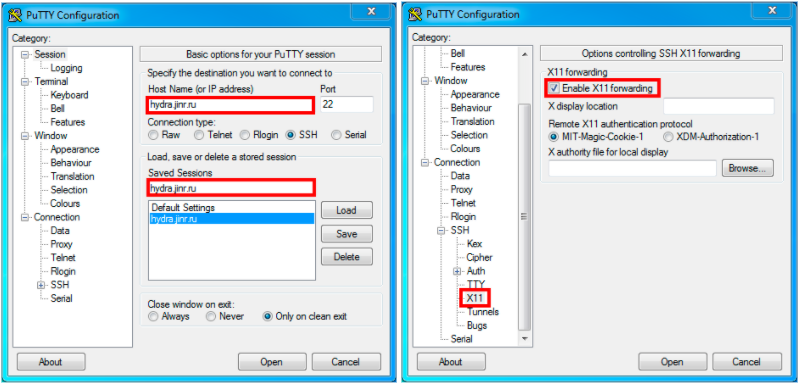

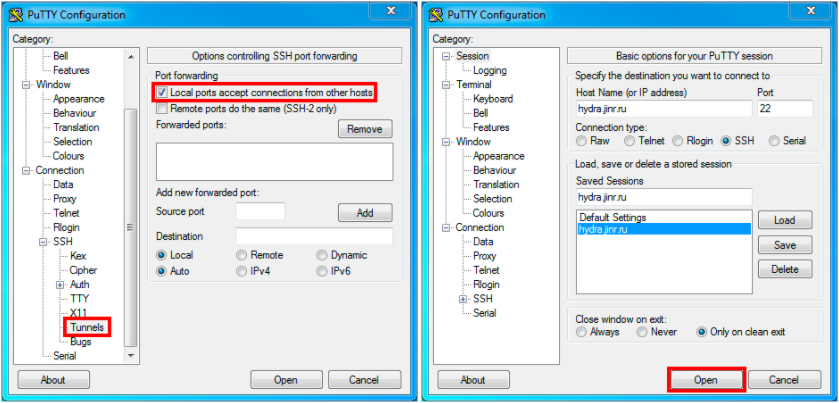

In order to connect to the cluster from Windows, it is necessary to use a special program, an SSH client, for example, PuTTY.

To install PuTTY on your computer, please download the putty.exe file at http://the.earth.li/~sgtatham/putty/latest/x86/putty.exe and run it.

Please see a step-by-step guide for setting PuTTY to get access to the cluster:

- In the field Host Name (or IP address) enter the server address: hydra.jinr.ru

- In the field Saved Sessions enter the connection name (e.g. hydra.jinr.ru).

- To connect a remote X11 graphic interface, please switch to the tab Connection > SSH > X11 and select the field “Enable X11 forwarding”

- Check if this field “Local ports accept connection from other hosts” is selected in the tab Connection > SSH > Tunnels

- Then switch back to the tab “Sessions” and press Save to save all changes.

- Press Open to connect to the HybriLIT platform and enter login/password that we sent you after registration.

Once authorized successfully on the platform, you will see the following command line on the screen:

```text
[USERNAME@hydra ~] $
```

This means you have connected to the platform and are in your home directory.

On the first attempt to access the platform, you will be notified that the IP address you are trying to connect to is unknown. Type “yes” and press Enter; the address will then be added to the list of known hosts.

In order to launch applications with a GUI, please enter:

```shell
[USERNAME@hydra ~] $ ssh -X space31
```

SLURM task manager:

SLURM is an open source, fault-tolerant, and highly scalable platform management and job scheduling system that provides three key functions:

- it allocates exclusive and/or non-exclusive access to resources (compute nodes) to users for some duration of time so they can perform work;

- it provides a framework for starting, executing, and monitoring work (normally a parallel job) on the set of allocated nodes;

- it arbitrates contention for resources by managing a queue of pending work.

1. Main commands

Main commands of SLURM include: sbatch, scancel, sinfo, squeue, scontrol.

sbatch – is used to submit a job script for later execution. The script will typically contain one or more srun commands to launch parallel tasks.

Once submitted, the application receives a jobid by which it can be found in the list of launched applications (squeue). The results are available in the slurm-jobid.out file.

Example of using sbatch:

```text
[user@hydra] sbatch runscript.sh
Submitted batch job 141980
```
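A short sketch of how the jobid can be extracted from the sbatch reply and turned into the default output file name. The reply string is copied from the example above so that the snippet runs anywhere; on the cluster it could instead be captured with `submit_output=$(sbatch runscript.sh)`. The variable names are illustrative.

```shell
# Sample sbatch reply, copied from the example above.
submit_output="Submitted batch job 141980"

# The jobid is the last space-separated word of the reply.
jobid=${submit_output##* }

# Default output file name, following the slurm-jobid.out pattern.
output_file="slurm-${jobid}.out"
echo "$output_file"
```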

squeue – reports the state of jobs or job steps. It has a wide variety of filtering, sorting, and formatting options.

By default, it reports the running jobs in priority order and then the pending jobs in priority order. A launched application may be in one of the following states:

RUNNING (R) – under execution;

PENDING (PD) – in queue;

COMPLETING (CG) – under termination (in this case you may need the help of the system administrator to remove the terminated application from the queue list).

Example of using squeue:

```text
[user@hydra] squeue
JOBID PARTITION     NAME     USER ST        TIME NODES NODELIST
28727       cpu sb_12945   dmridu  R  8-00:12:12     1 blade01
29512       gpu    smd5a ygorshko  R  2-04:13:11     1 blade04
```
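The state column can be processed with standard text tools. The sketch below counts running jobs in the sample listing above; the listing is embedded as a string so the snippet is self-contained, whereas on the cluster the same awk filter could be applied to the live output of `squeue`.

```shell
# Sample squeue listing, copied from the example above.
squeue_output='JOBID PARTITION NAME USER ST TIME NODES NODELIST
28727 cpu sb_12945 dmridu R 8-00:12:12 1 blade01
29512 gpu smd5a ygorshko R 2-04:13:11 1 blade04'

# Skip the header line and count rows whose state (5th column) is R.
running=$(printf '%s\n' "$squeue_output" |
    awk 'NR > 1 && $5 == "R" { n++ } END { print n + 0 }')

echo "running jobs: $running"
```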

sinfo – reports the state of partitions and nodes managed by Slurm. It has a wide variety of filtering, sorting, and formatting options.

Computation nodes may be in one of the following states:

idle – node is free;

alloc – node is fully in use;

mix – node is used partially;

down, drain, drng – node is blocked.

Example of using sinfo:

```text
[user@hydra] sinfo
PARTITION    AVAIL TIMELIMIT NODES STATE NODELIST
interactive* up      1:00:00     1 idle  blade02
cpu          up     infinite     1 mix   blade03
cpu          up     infinite     1 alloc blade01
gpu          up     infinite     1 mix   blade04
gpu          up     infinite     3 idle  blade[05-07]
phi          up     infinite     1 mix   blade03
gpuK80       up     infinite     2 idle  blade[08-09]
```
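As an illustration of reading this listing, the sketch below selects the partitions that currently have free nodes. The sample output is embedded as a string; on the cluster the same filter could be fed from `sinfo` directly.

```shell
# Sample sinfo listing, copied from the example above.
sinfo_output='PARTITION AVAIL TIMELIMIT NODES STATE NODELIST
interactive* up 1:00:00 1 idle blade02
cpu up infinite 1 mix blade03
cpu up infinite 1 alloc blade01
gpu up infinite 1 mix blade04
gpu up infinite 3 idle blade[05-07]
phi up infinite 1 mix blade03
gpuK80 up infinite 2 idle blade[08-09]'

# Print partition and node list for every row in the idle state.
idle_nodes=$(printf '%s\n' "$sinfo_output" |
    awk 'NR > 1 && $5 == "idle" { print $1, $6 }')

printf '%s\n' "$idle_nodes"
```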

scancel – is used to cancel a pending or running job or job step. It can also be used to send an arbitrary signal to all processes associated with a running job or job step.

Example of using scancel to cancel a pending application with jobid 141980:

```shell
[user@hydra] scancel 141980
```

scontrol – is the administrative tool used to view and/or modify Slurm state. Note that many scontrol commands can only be executed as user root.

Example of using scontrol to see specifications of the launched application:

```text
[user@hydra] scontrol show job 141980
JobId=141980 JobName=test
UserId=user(11111) GroupId=hybrilit(10001)
JobState=RUNNING Reason=None Dependency=(null)
…
```

List of specifications contains such parameters as:

| Parameter | Function |

|---|---|

| UserId | User name |

| JobState | Application state |

| RunTime | Computation time |

| Partition | Partition used |

| NodeList | Nodes used |

| NumNodes | Number of used nodes |

| NumCPUs | Number of used processor cores |

| Gres | Number of used graphic accelerators and coprocessors |

| MinMemoryCPU | Amount of used RAM |

| Command | Location of the file for launching application |

| StdErr | Location of the file with error logs |

| StdOut | Location of the file with output data |

Example of using scontrol to see node specifications:

```shell
[user@hydra] scontrol show nodes
[user@hydra] scontrol show node blade01
```

The list of specifications contains such parameters as:

NodeName – hostname of a computation node;

CPUAlloc – number of loaded computation cores;

CPUTot – total number of computation cores per node;

CPULoad – loading of computation cores;

Gres – number of graphic accelerators and coprocessors available for computations;

RealMemory – total amount of RAM per node;

AllocMem – amount of loaded RAM;

State – node state.

2. Partitions

A job starts by waiting in the queue of one of the partitions. Since HybriLIT is a heterogeneous platform, different partitions were created for different kinds of resources.

Currently HybriLIT includes 6 partitions:

- interactive* – includes 1 computation node with 2 12-core Intel Xeon E5-2695 v2 processors, 1 NVIDIA Tesla K20X and 1 Intel Xeon Phi Coprocessor 5110P (* denotes the default partition). This partition is suited to running test programs. Computation time in this partition is limited to 1 hour;

- cpu – includes 4 computation nodes, each with 2 12-core Intel Xeon E5-2695 v2 processors. This partition is suited to applications that use central processing units (CPU) for computations;

- gpu – includes 3 computation nodes, each with 3 NVIDIA Tesla K40 (Atlas). This partition is suited to applications that use graphics accelerators (GPU) for computations;

- gpuK80 – includes 3 computation nodes, each with 2 NVIDIA Tesla K80. This partition is suited to applications that use GPU for computations;

- phi – includes 1 computation node with 2 Intel Xeon Phi Coprocessor 7120P. This partition is suited to applications that use coprocessors for computations;

- long – includes 1 computation node with NVIDIA Tesla. This partition is suited to applications that require time-consuming computations (up to 14 days).

3. Description and examples of script-files

In order to run applications by means of the sbatch command, you need a script-file. Normally, a script-file is an ordinary bash file that meets the following requirements:

- The first line is #!/bin/sh (or #!/bin/bash), which allows the script to be run as a shell script;

- Lines beginning with # are comments;

- Lines beginning with #SBATCH set parameters for the SLURM job manager;

- All SLURM parameters are to be set BEFORE the command that launches the application;

- The script-file includes a command for launching the application.

SLURM has a big number of various parameters (https://computing.llnl.gov/linux/slurm/sbatch.html). Please see the required and recommended parameters for work on HybriLIT below:

-p – partition used. If this parameter is absent, your job is sent to the interactive partition, where execution time is limited to 1 hour. Depending on the type of resources used, the application may be executed in one of the available partitions: cpu, phi, gpu, gpuK80;

-n – number of processors used;

-t – allocated computation time. This is a necessary parameter to be set. The following parameter formats are available: minutes, minutes:seconds, hours:minutes:seconds, days-hours, days-hours:minutes, days-hours:minutes:seconds;

--gres – number of allocated NVIDIA graphic accelerators and Intel Xeon Phi coprocessors. This parameter is necessary if your application uses gpu OR Intel Xeon Phi coprocessors;

--mem – allocated RAM (in Mbytes). This parameter is not necessary to be set, however, consider setting it if your application uses a large amount of RAM;

-N – number of nodes used. This parameter should be set ONLY in case if your application needs more resources than 1 node possesses;

-o – name of the output file. By default, all results are written in slurm-jobid.out file.
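To make the time formats accepted by -t concrete, the sketch below converts each of the listed forms into seconds. The function name `slurm_time_to_seconds` is a hypothetical helper written for illustration; it is not a SLURM command, and it assumes well-formed input.

```shell
# Hypothetical helper: convert a -t value to seconds.
# Accepts: minutes, minutes:seconds, hours:minutes:seconds,
#          days-hours, days-hours:minutes, days-hours:minutes:seconds
slurm_time_to_seconds() {
    echo "$1" | awk '{
        days = 0; rest = $0
        has_day = index($0, "-")
        if (has_day) { split($0, d, "-"); days = d[1]; rest = d[2] }
        n = split(rest, t, ":")
        if (has_day || n == 3) {
            # hours[:minutes[:seconds]]
            h = t[1]; m = t[2]; s = t[3]
        } else {
            # minutes[:seconds]
            h = 0; m = t[1]; s = t[2]
        }
        print days * 86400 + h * 3600 + m * 60 + s
    }'
}

slurm_time_to_seconds 60        # 60 minutes, as in "#SBATCH -t 60"
slurm_time_to_seconds 1-12      # 1 day and 12 hours
```

Note that a bare number is interpreted as minutes, so `-t 60` requests one hour.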

Please see below examples of scripts that use various resources of the HybriLIT platform:

For computations using CPU:

```shell
#!/bin/sh
#SBATCH -p cpu
#SBATCH -t 60
./a.out
```

For computations using GPU:

```shell
#!/bin/sh
#SBATCH -p gpu
#SBATCH -t 60
#SBATCH --gres=gpu:2
./a.out
```

For computations using Intel Xeon Phi coprocessors:

```shell
#!/bin/sh
#SBATCH -p phi
#SBATCH -t 60
#SBATCH --gres=phi:2
./a.out
```

Examples of using script-files for different programming technologies will be given below in the corresponding sections.

5 basic steps to carry out computations on the platform:

We can point out 5 basic steps that describe the workflow on the platform:

Compilation and launch of OpenMP-applications

MANUAL “Fundamentals of OpenMP technology on the HybriLIT cluster” (in Russian).

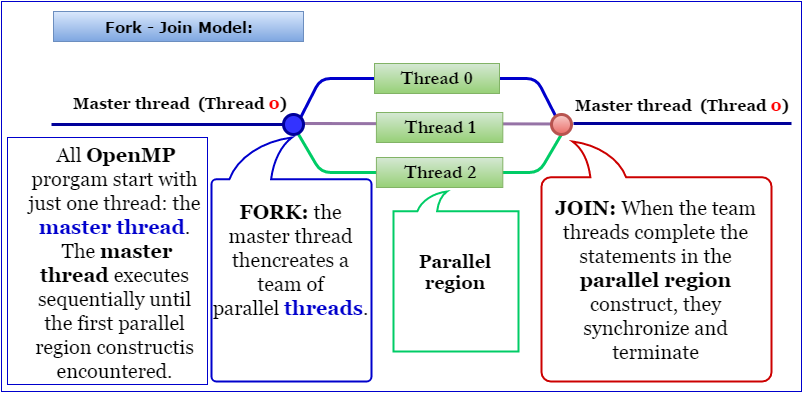

OpenMP (Open Multi-Processing) is an application programming interface (API) that supports multi-platform shared-memory multiprocessing programming in C, C++ and Fortran. It consists of a set of compiler directives, library routines, and environment variables that influence run-time behavior and are meant for developing multi-threaded applications on multi-processor systems with shared memory. OpenMP uses the Fork-Join programming model, which works as follows (Pic. 1):

Any program starts in Thread 0 (master thread). Then, by means of compiler directives, Thread 0 creates a set of other threads (FORK), which are executed in parallel. Once the created threads finish their work in the parallel region, all threads synchronize (JOIN) and the program continues its work in the master thread.

Pic. 1. Fork-Join program model.

Compilation

A standard set of compilers with support for OpenMP is being used. GNU and Intel compilers are available. GNU compilers with support for OpenMP: 4.8.5 (by default), 4.9.3-1, 6.2.0-2, 7.2.0-1, 8.2.0-1 or 9.1.0-1 are available. Before compilation using Intel compilers, it is necessary to load the corresponding modules:

```shell
$ module add intel-2016.1.150
```

Please see below basic commands for compilation of programs written in С, С++ or Fortran for different compilers:

| | Intel | GNU | PGI |

|---|---|---|---|

| C | icc -openmp hello.c | gcc -fopenmp hello.c | pgcc -mp hello.c |

| C++ | icpc -openmp hello.cpp | g++ -fopenmp hello.cpp | pgc++ -mp hello.cpp |

| Fortran | ifort -openmp hello.f | gfortran -fopenmp hello.f | pgfortran -mp hello.f |

If compilation succeeds, a binary executable file is created. By default, the name of the binary file for all compilers is a.out. You can set another name with the -o option. For example, if we use the following command:

```shell
$ icc -fopenmp hello.c -o hello
```

the name of the binary file will be hello.

Launch

OpenMP applications are launched by means of a script-file that contains the following data:

```shell
#!/bin/sh
# the line above sets the shell used
#SBATCH -p cpu      # selecting the required partition
#SBATCH -c 5        # setting the number of computation threads
#SBATCH -t 60       # setting computation time
./test              # launch of application
```

The following setting optimizes the distribution of threads over computation cores and, as a rule, gives a shorter computation time than running without it:

```shell
$ export OMP_PLACES=cores
```

The number of OMP threads may be set with the environment variable OMP_NUM_THREADS before executing the program in the command line:

```shell
$ export OMP_NUM_THREADS=threads
```

where threads is the number of OMP threads.

Thus, the recommended script-file for OpenMP-applications with, for example, 5 threads looks like this:

```shell
#!/bin/sh
#SBATCH -p cpu
#SBATCH -c 5
#SBATCH -t 60
export OMP_NUM_THREADS=5
export OMP_PLACES=cores
./a.out
```
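To avoid repeating the thread count in two places, the number of threads can be derived from the value passed to -c. The sketch below relies on the SLURM_CPUS_PER_TASK variable, which the hybrid job scripts later in this guide also use, and falls back to 1 when it is not set; the function name is an illustrative assumption.

```shell
# Use the value of "#SBATCH -c" when SLURM provides it, otherwise 1.
omp_threads_from_slurm() {
    echo "${SLURM_CPUS_PER_TASK:-1}"
}

OMP_NUM_THREADS=$(omp_threads_from_slurm)
export OMP_NUM_THREADS
echo "OMP_NUM_THREADS=$OMP_NUM_THREADS"
```

With this in the script-file, changing the -c line is enough to change the number of OpenMP threads.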

Use the following command to launch:

```shell
$ sbatch omp_script
```

Compilation and launch of MPI-applications

Message Passing Interface (MPI) is a standardized and portable message-passing system for exchanging information between processes that execute one job.

GNU and Intel compilers are available for work with MPI.

GNU compiler

MPI programs can be compiled using GNU compilers with the OpenMPI library. GNU compilers are installed on HybriLIT by default. To get access to the OpenMPI libraries, it is necessary to load the module for one of the available versions (1.8.8, 2.1.2, 3.1.2 or 3.1.3), using one of the following commands:

```shell
$ module add hlit/openmpi/1.8.8
$ module add hlit/openmpi/2.1.2
$ module add hlit/openmpi/3.1.2
$ module add hlit/openmpi/3.1.3
```

Compilation

Please see below basic commands for compilation of programs written in С, С++ or Fortran for GNU-compiler:

| Programming languages | Compilation commands |

|---|---|

| C | mpicc |

| C++ | mpiCC / mpic++ / mpicxx |

| Fortran 77 | mpif77 / mpifort (*) |

| Fortran 90 | mpif90 / mpifort (*) |

(*) It is recommended to use the mpifort command instead of mpif77 or mpif90, as they are considered out-of-date. With mpifort it is possible to compile any Fortran program that uses “mpif.h” or “use mpi” as an interface.

Example of compiling a program written in C:

```shell
$ mpicc example.c
```

If you do not set a custom name to the binary executable file, the name after successful compilation will be a.out by default.

To start the program using OpenMPI modules, use this script:

```shell
#!/bin/sh
#Example script for OpenMPI:
#Choosing queue to which the task will be sent
#SBATCH -p cpu
#
#Running MPI tasks on 2 nodes, 3 MPI-process per node
#SBATCH --nodes=2 # Number of nodes
#SBATCH --ntasks-per-node=3 # the number of MPI-processes on the node
#
#Setting the time of the program in the format:
#minutes
#minutes:seconds
#days-hours
#days-hours:minutes:seconds
#SBATCH -t 60 # implementation of the program in minutes
#
#program launch
mpirun ./a.out
#
#end of the script
```

Intel-compiler

In order to use MPI with the Intel compiler, it is necessary to load a module:

```shell
$ module add intel/v2018.1.163-9
```

This module includes MPI-library.

Compilation

Please see below basic commands for compilation of programs written in С, С++ or Fortran for Intel-compiler:

| Programming languages | Compilation commands |

|---|---|

| C | mpiicc |

| C++ | mpiicpc |

| Fortran | mpiifort |

Example of compiling a program written in Fortran:

```shell
$ mpiifort example.f
```

Options of compilation optimization:

| Options | Purpose |

|---|---|

| -O0 | Without optimization; used for GNU-compiler by default |

| -O2 | Used for Intel-compiler by default |

| -O3 | May be efficient for a particular range of programs |

| -march=native; -march=core2 | Adjustment for processor architecture (using optional capability of Intel processors) |

Initiation of tasks

In order to initiate a task, use the following SLURM command:

```shell
$ sbatch script_mpi
```

where script_mpi is the name of a previously prepared script-file that contains task parameters.

Example of script-file for launching MPI-applications on two computation nodes

The following two approaches describe distribution of MPI processes over computational nodes.

Example of using the combination of keys --tasks-per-node and -n: 10 processes (-n 10) with 5 processes per computational node (--tasks-per-node=5). Thus, computations are distributed over 2 computation nodes:

```shell
#!/bin/sh
#setting input queue of tasks for MPI-program:
#SBATCH -p cpu
#providing required number of processors (cores),
#equal to the number of parallel processes:
#SBATCH -n 10
#setting number of processes per node:
#SBATCH --tasks-per-node=5
#setting time limits for performing computations:
#The following formats are available: minutes, minutes:seconds, days-hours, days-hours:minutes:seconds;
#SBATCH -t 60
#setting the file name for tasks listing:
#SBATCH -o output.txt
#launching of tasks:
mpirun ./a.out
```

Example of using the combination of keys --tasks-per-node and -N: 5 processes per computational node (--tasks-per-node=5) on 2 nodes (-N 2). Thus, 10 processes are distributed over 2 computation nodes:

```shell
#!/bin/sh
# setting input queue of tasks for MPI-program:
#SBATCH -p cpu
# setting number of processes per node:
#SBATCH --tasks-per-node=5
#launching processes on 2 computation nodes (**):
#SBATCH -N 2
#setting time limits for performing computations:
#The following formats are available: minutes, minutes:seconds, hours:minutes:seconds, days-hours, days-hours:minutes, days-hours:minutes:seconds;
#SBATCH -t 60
#setting name for the output file:
#SBATCH -o output.txt
#launching of tasks:
mpirun ./a.out
```

Additional nodes (**) can be ordered if the task requires more than 24 parallel processes.
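The relation between the number of processes, processes per node and nodes is simple integer arithmetic. The sketch below computes how many nodes a job needs; `nodes_needed` is a hypothetical helper, and the figure of 24 processes per node is taken from the note above.

```shell
# Hypothetical helper: nodes required for a given number of MPI
# processes and a given number of processes per node (rounded up).
nodes_needed() {
    ntasks=$1
    per_node=$2
    echo $(( (ntasks + per_node - 1) / per_node ))
}

nodes_needed 10 5     # the "-n 10 --tasks-per-node=5" example
nodes_needed 25 24    # one process more than fits on a single node
```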

Example of a simple script-file in which the most important SLURM-directives are present:

```shell
#!/bin/sh
#SBATCH -p cpu
#SBATCH -n 7
#SBATCH -t 60
mpirun ./a.out
```

The executable file a.out (prepared by the compiler) is sent to the input queue of tasks for computations on the cpu partition of the HybriLIT platform. The task requires 7 cores. The task is given a unique id in the input queue, say 1234. The task listing is then written to slurm-1234.out in the same directory that contains the executable file a.out.

In order to call MPI-procedures in programs in Fortran, Include ‘mpif.h’ operator is used. This operator can be substituted with a more powerful option – mpi.mod module that is loaded by means of Use mpi command.

Launching tasks

- When launching tasks, it is necessary to take into account the limitations on using the resources of the platform;

- it is advisable to load the same environment modules with which the program was compiled;

- it is important not to delete the executable file or change the input data until the task is complete.

Compilation and launch of CUDA-applications

CUDA (Compute Unified Device Architecture) is a hardware and software parallel computing architecture that significantly enhances computing performance through the use of NVIDIA graphics processing units (GPUs).

Available CUDA versions

The most efficient technology enabling the use of NVIDIA GPUs is the parallel computing platform, Compute Unified Device Architecture (CUDA), which provides a set of extensions for the C/C++, Fortran languages.

Two CUDA-enabled compilers are available for developing parallel applications (debugging, profiling and compiling) using NVIDIA Tesla GPUs on the HybriLIT platform:

- CUDA implementation for the C/C++ language from NVIDIA on top of the Open64 open-source compiler: nvcc compiler.

- CUDA implementation for Fortran with a closed license from Portland Group Inc. (PGI): pgfortran

Compiling CUDA C/C++ applications

The following CUDA versions, which are connected through the corresponding modules of the MODULES package, are available on the platform:

| CUDA version | The corresponding modules |

|---|---|

| 8.0 | $ module add cuda/v8.0-1 |

| 9.2 | $ module add cuda/v9.2 |

| 10.0 | $ module add cuda/v10.0-1 |

| 10.1 | $ module add cuda/v10.1-1 |

| 11.4 | $ module add cuda/v11.4 |

Three representatives of NVIDIA Tesla graphics accelerators, namely, Tesla K20X, Tesla K40, Tesla K80, are available for computations using GPUs. According to the architecture naming system adopted by NVIDIA, GPUs are named as sm_xy, where x denotes the GPU generation number, y is the generation version. Tesla K20 and Tesla K40 GPUs have the sm_35 architecture, while Tesla K80 GPUs have the sm_37 architecture.

CUDA 8.0, 9.2, 10.0, 10.1, 11.4, 12.1

To compile a CUDA application that supports all architectures available on the platform, one can use a single compilation command (starting from CUDA 8.0):

```shell
$ nvcc app.cu --gpu-architecture=compute_35 --gpu-code=sm_35,sm_37
```
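When an application targets only one GPU model, the matching architecture can be looked up from the assignments given above (K20 and K40 are sm_35, K80 is sm_37). The sketch below encodes that mapping; `gpu_code_for` is a hypothetical helper, and the snippet only prints the compile command rather than running nvcc.

```shell
# Hypothetical helper: map a Tesla model name to its sm_xy architecture,
# following the assignments given in this section.
gpu_code_for() {
    case $1 in
        K20|K20X|K40) echo sm_35 ;;
        K80)          echo sm_37 ;;
        *) echo "unknown GPU model: $1" >&2; return 1 ;;
    esac
}

# Print (not run) the compile command for a K80-only build.
echo "nvcc app.cu --gpu-architecture=compute_35 --gpu-code=$(gpu_code_for K80)"
```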

Compiling applications using CUDA libraries

To perform computations on graphics accelerators, the CUDA hardware and software platform provides a number of well-optimized mathematical libraries that do not entail special installation. For example:

- cuBLAS library for matrix-vector operations, an implementation of the BLAS (Basic Linear Algebra Subprograms) library;

- CUFFT library, an implementation of the fast Fourier transform (FFT), which comprises two separate libraries: CUFFT and cuFFTW;

- cuRAND library, which provides tools for efficiently generating high-quality pseudo-random and quasi-random numbers;

- cuSPARSE library, designed for operations with sparse matrices, for example, for solving systems of linear algebraic equations with a matrix of a system that has a band structure.

More information is available at http://docs.nvidia.com/cuda/

An example of compiling applications with functions from the cuBLAS and cuFFT libraries is given below:

```shell
$ nvcc app.cu -lcublas -lcufft --gpu-architecture=compute_35 --gpu-code=sm_35
```

As seen from this compilation line, one only needs to add the -lcublas -lcufft keys.

Running GPU applications on the platform in the SLURM system

To run applications that use graphics accelerators in the SLURM system in batch mode, it is required to specify the following obligatory parameters/options in the script file:

- name of the corresponding queue, depending on the GPU type (see Section 1.3.2). For example, to use NVIDIA Tesla K80 graphics accelerators in computations, one needs to add the following line to the script file:

```shell
#SBATCH -p gpuK80
```

- number of required GPUs, which is specified by the --gres (Generic consumable RESources) option in the form:

```shell
--gres=gpu:<number of GPUs per node>
```

For instance, to use three GPUs on one node, one needs to add the following line to the script file:

```shell
#SBATCH --gres=gpu:3
```

To use more GPUs in computations than are available on one node, one needs to add two lines to the script file:

```shell
#SBATCH --nodes=2
#SBATCH --gres=gpu:3
```

In this case, two compute nodes with three GPUs on each, for a total of six GPUs, will be used.
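The total is simply the product of the two options, since --gres is counted per node. A small sketch that derives both directive lines and the total from two illustrative variables:

```shell
# Illustrative values matching the example above.
nodes=2
gpus_per_node=3

# --gres is per node, so the job total is the product.
total_gpus=$(( nodes * gpus_per_node ))

echo "#SBATCH --nodes=$nodes"
echo "#SBATCH --gres=gpu:$gpus_per_node"
echo "# total GPUs in the job: $total_gpus"
```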


Features of computations using the NVIDIA K80 accelerator.

The Tesla K80 graphics accelerator is a dual-processor device (two GPUs in one device) and has nearly twice the performance and twice the memory bandwidth of its predecessor, Tesla K40.

To use both processors of the Tesla K80 accelerator, it is required to specify the --gres=gpu:2 parameter in the script file.

Applications that use a single GPU

Typical script files for running applications that use one NVIDIA Tesla K40 graphics accelerator are given below:

```shell
#!/bin/sh
# Example for one-GPU applications
#
# Set the partition where the job will run:
#SBATCH -p gpu
#
#Set the number of GPUs per node
#SBATCH --gres=gpu:1
#
# Set time of work: Available following formats: minutes, minutes:seconds, hours:minutes:seconds, days-hours, days-hours:minutes, days-hours:minutes:seconds;
#SBATCH -t 60
#
#Submit a job for execution:
./testK40
#
# End of submit file
```

and for one processor of the Tesla K80 accelerator in the SLURM system in batch mode:

```shell
#!/bin/sh
# Example for one-GPU applications
#
# Set the partition where the job will run:
#SBATCH -p gpuK80
#
#Set the number of GPUs per node
#SBATCH --gres=gpu:1
#
# Set time of work: Available following formats: minutes, minutes:seconds, hours:minutes:seconds, days-hours, days-hours:minutes, days-hours:minutes:seconds;
#SBATCH -t 60
#
#Submit a job for execution:
./testK80
#
# End of submit file
```

To use several GPUs in computations, a mechanism to distribute tasks between different devices, including those located on different platform nodes, is needed, i.e., the following cases are possible:

- several GPUs on one compute node;

- several compute nodes with GPUs.

To efficiently use several GPUs, hybrid applications such as OpenMP+CUDA, MPI+CUDA, MPI+OpenMP+CUDA, etc. are developed. More information can be found in the GitLab project “Parallel features”: https://gitlab-hybrilit.jinr.ru/.

Compilation and launch of OpenMP+CUDA hybrid applications

This section provides an example of running an application that uses multiple GPUs (multi-GPU application) on one node. For example, such an application can be written using two parallel programming technologies, OpenMP+CUDA, where each thread (OpenMP thread) is related to a single GPU.

When using NVIDIA Tesla K40 accelerators in computing:

```shell
#!/bin/sh
# Example for multi-GPU applications,
# job running on a single node
#
# Set the partition where the job will run:
#SBATCH -p gpu
#
#Set the number of GPUs per node
# (maximum 3):
#SBATCH --gres=gpu:3
#
#Set number of cores per task
#SBATCH -c 3
#
# Set time of work: Available following formats: minutes, minutes:seconds, hours:minutes:seconds, days-hours, days-hours:minutes, days-hours:minutes:seconds;
#SBATCH -t 60
#
#Set OMP_NUM_THREADS to the same
# value as -c
if [ -n "$SLURM_CPUS_PER_TASK" ]; then
  omp_threads=$SLURM_CPUS_PER_TASK
else
  omp_threads=1
fi
export OMP_NUM_THREADS=$omp_threads
#
#Submit a job for execution:
srun ./testK40
#
# End of submit file
```

When using NVIDIA Tesla K80 accelerators in computing:

    #!/bin/sh
    # Example for multi-GPU applications,
    # job running on a single node
    #
    # Set the partition where the job will run:
    #SBATCH -p gpuK80
    #
    # Set the number of GPUs per node
    # (maximum 4 - K80=2 x K40):
    #SBATCH --gres=gpu:4
    #
    # Set the number of cores per task:
    #SBATCH -c 4
    #
    # Set the time limit. Available formats: minutes, minutes:seconds,
    # hours:minutes:seconds, days-hours, days-hours:minutes,
    # days-hours:minutes:seconds
    #SBATCH -t 60
    #
    # Set OMP_NUM_THREADS to the same
    # value as -c
    if [ -n "$SLURM_CPUS_PER_TASK" ]; then
      omp_threads=$SLURM_CPUS_PER_TASK
    else
      omp_threads=1
    fi
    export OMP_NUM_THREADS=$omp_threads
    #
    # Run the application:
    srun ./testK80
    #
    # End of submit file

Compilation and launch of MPI+CUDA hybrid applications

This section provides an example of running an application that uses multiple GPUs (a multi-GPU application) on several compute nodes. Such an application can be written, for example, with two parallel programming technologies, MPI+CUDA, where each MPI process works with a single GPU and the number of processes per node is specified.
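A common way to give each process its own GPU is to take the node-local rank of the process modulo the number of GPUs on the node. The sketch below only illustrates that arithmetic. `gpu_for_local_rank` is a hypothetical helper, and the sketch assumes that the launcher exposes the local rank in `OMPI_COMM_WORLD_LOCAL_RANK` (Open MPI) or `SLURM_LOCALID` (srun).

```shell
# Hypothetical helper: map a node-local rank to a GPU index.
# $1 = node-local rank (0, 1, 2, ...), $2 = number of GPUs per node.
gpu_for_local_rank() {
    echo $(( $1 % $2 ))
}

# Assumption: the launcher exports one of these variables for every process;
# fall back to rank 0 when neither is set.
local_rank=${OMPI_COMM_WORLD_LOCAL_RANK:-${SLURM_LOCALID:-0}}
gpus_per_node=3   # corresponds to --gres=gpu:3 on the K40 nodes

# Compute the device index for this process. An application would typically
# pass the same index to cudaSetDevice() before launching kernels.
selected_gpu=$(gpu_for_local_rank "$local_rank" "$gpus_per_node")
echo "local rank $local_rank -> GPU $selected_gpu"
```

With three GPUs per node, local ranks 0, 1 and 2 map to devices 0, 1 and 2, so no two processes on a node share a device as long as the number of processes per node does not exceed the number of GPUs.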

When using NVIDIA Tesla K40 accelerators in computing:

    #!/bin/sh
    # Example for GPU applications
    #
    # Set the partition where the job will run:
    #SBATCH -p gpu
    #
    # Set the number of nodes (maximum 4):
    #SBATCH --nodes=2
    #
    # Set the number of GPUs per node
    # (maximum 3):
    #SBATCH --gres=gpu:3
    #
    # Set the number of MPI tasks:
    #SBATCH -n 6
    #
    # Set the time limit. Available formats: minutes, minutes:seconds,
    # hours:minutes:seconds, days-hours, days-hours:minutes,
    # days-hours:minutes:seconds
    #SBATCH -t 60
    #
    # Run the application:
    mpirun ./testK40mpiCuda
    #
    # End of submit file

When using NVIDIA Tesla K80 accelerators in computing:

    #!/bin/sh
    # Example for GPU applications
    #
    # Set the partition where the job will run:
    #SBATCH -p gpuK80
    #
    # Set the number of nodes (maximum 2):
    #SBATCH --nodes=2
    #
    # Set the number of GPUs per node
    # (maximum 4 - K80=2 x K40):
    #SBATCH --gres=gpu:4
    #
    # Set the number of MPI tasks:
    #SBATCH -n 8
    #
    # Set the time limit. Available formats: minutes, minutes:seconds,
    # hours:minutes:seconds, days-hours, days-hours:minutes,
    # days-hours:minutes:seconds
    #SBATCH -t 60
    #
    # Run the application:
    mpirun ./testK80mpiCuda
    #
    # End of submit file
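In both MPI+CUDA scripts the value given to `-n` equals the number of nodes multiplied by the number of GPUs requested per node, so that every GPU is served by exactly one MPI process. The short sketch below only restates that arithmetic; the helper name `mpi_tasks_for_gpus` is hypothetical.

```shell
# Hypothetical helper: number of MPI tasks needed for one process per GPU.
# $1 = number of nodes (--nodes), $2 = GPUs requested per node (--gres=gpu:N).
mpi_tasks_for_gpus() {
    echo $(( $1 * $2 ))
}

mpi_tasks_for_gpus 2 3   # K40 example: 2 nodes x 3 GPUs, prints 6
mpi_tasks_for_gpus 2 4   # K80 example: 2 nodes x 4 GPUs, prints 8
```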

Compilation and launch of MPI+OpenMP hybrid applications

Perhaps the most efficient means of developing hybrid algorithms is a combination of MPI and OpenMP technologies. Let us look at an example of compiling and running an MPI+OpenMP program on the SkyLake and KNL architectures.

To do this, add an MPI module:

    $ module add openmpi/v*.*

or

    $ module add intel/v*.*

where *.* is the version number.

To compile, use the mpic++ compiler wrapper:

    $ mpic++ HelloWorld_MpiOmp.cpp -fopenmp

or mpiicpc:

    $ mpiicpc HelloWorld_MpiOmp.cpp -fopenmp

To run an MPI+OpenMP application on the SkyLake architecture, use the following script:

    #!/bin/sh
    #SBATCH -p skylake            # Partition name
    #SBATCH --nodes=2             # Number of nodes
    #SBATCH --ntasks-per-node=2   # Number of tasks per node
    #SBATCH --cpus-per-task=2     # Number of OpenMP threads for each MPI process
    export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK
    mpirun ./a.out

This script requests two nodes, with two MPI processes per node and two OpenMP threads for each MPI process. After submitting the job

    $ sbatch script_sl.sh

the output file contains the following messages:

    Hello from thread 0 out of 2 from process 0 out of 4 on SkyLake (node n02p001.gvr.local)
    Hello from thread 1 out of 2 from process 0 out of 4 on SkyLake (node n02p001.gvr.local)
    Hello from thread 0 out of 2 from process 1 out of 4 on SkyLake (node n02p001.gvr.local)
    Hello from thread 1 out of 2 from process 1 out of 4 on SkyLake (node n02p001.gvr.local)
    Hello from thread 0 out of 2 from process 2 out of 4 on SkyLake (node n02p002.gvr.local)
    Hello from thread 1 out of 2 from process 2 out of 4 on SkyLake (node n02p002.gvr.local)
    Hello from thread 0 out of 2 from process 3 out of 4 on SkyLake (node n02p002.gvr.local)
    Hello from thread 1 out of 2 from process 3 out of 4 on SkyLake (node n02p002.gvr.local)

Each OpenMP thread prints a greeting that reports its thread number within its MPI process, the rank of that process, and the node on which it ran.

Let us run the application on the KNL architecture with the following script:

    #!/bin/sh
    #SBATCH -p knl                # Partition name
    #SBATCH --nodes=1             # Number of nodes
    #SBATCH --ntasks-per-node=2   # Number of tasks per node
    #SBATCH --cpus-per-task=3     # Number of OpenMP threads for each MPI process
    export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK
    mpirun ./a.out

This SLURM script requests one node in the knl partition, with two MPI processes and three OpenMP threads for each MPI process.

After submitting the job

    $ sbatch script_knl.sh

the output file contains the following messages:

    Hello from thread 1 out of 3 from process 0 out of 2 on KNL (node n01p005.gvr.local)
    Hello from thread 0 out of 3 from process 0 out of 2 on KNL (node n01p005.gvr.local)
    Hello from thread 2 out of 3 from process 0 out of 2 on KNL (node n01p005.gvr.local)
    Hello from thread 0 out of 3 from process 1 out of 2 on KNL (node n01p005.gvr.local)
    Hello from thread 1 out of 3 from process 1 out of 2 on KNL (node n01p005.gvr.local)
    Hello from thread 2 out of 3 from process 1 out of 2 on KNL (node n01p005.gvr.local)

Again, each OpenMP thread prints a greeting; note that all of them ran on a single node. The order of threads within a process is not fixed, as the first two lines show.
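The number of greetings in each output follows from the job geometry: nodes multiplied by tasks per node multiplied by threads per task. The sketch below shows one way to check a finished job against that expectation. Both helper names are hypothetical and are not part of SLURM or of the example program.

```shell
# Hypothetical helper: expected number of greetings.
# $1 = --nodes, $2 = --ntasks-per-node, $3 = --cpus-per-task.
expected_greetings() {
    echo $(( $1 * $2 * $3 ))
}

# Hypothetical helper: count the greeting lines present in an output file.
count_greetings() {
    grep -c '^Hello from thread' "$1"
}

expected_greetings 2 2 2   # SkyLake script: prints 8
expected_greetings 1 2 3   # KNL script: prints 6
```

For a complete SkyLake run, `count_greetings` applied to the job's output file (by default SLURM names it `slurm-<jobid>.out`) should return the same value as `expected_greetings 2 2 2`.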

The program is available for downloading at gitlab-hlit.jinr.ru/ayriyan/mpiomp.