D. Belyakov¹, D. Dereza², G. Karpov¹, M. Lebedev², M. Skazkin², M. Zuev²

¹ Joint Institute for Nuclear Research, Dubna, Russia

² Far Eastern Federal University, Vladivostok, Russia

Several monitoring systems are used on the HybriLIT heterogeneous platform to track the operation of platform components and, in particular, the load on computing resources.

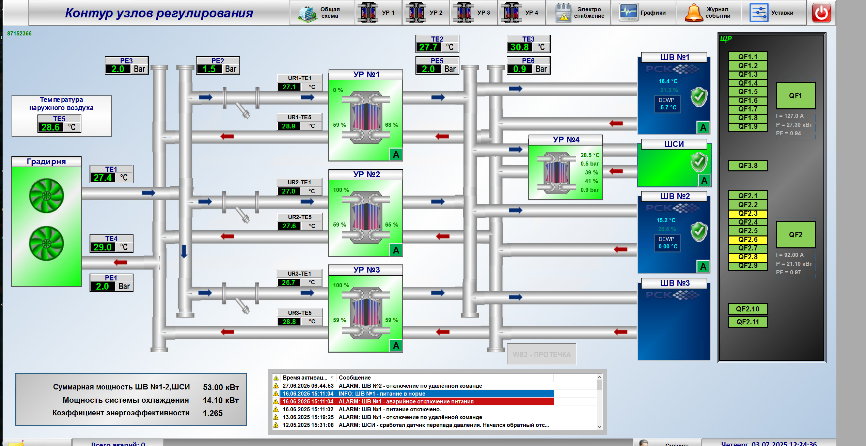

- RSC cooling monitoring system of the Govorun supercomputer (RSC, [1]). The Govorun supercomputer is cooled by a liquid cooling system. Specialized software developed by RSC is used to control the operation of the liquid supply control units (Fig. 1-2).

Figure 1. Control and Monitoring System for the cooling system of the Govorun supercomputer, specialized software developed by RSC.

Figure 2. Control and Monitoring System for supply control unit #1, specialized software developed by RSC.

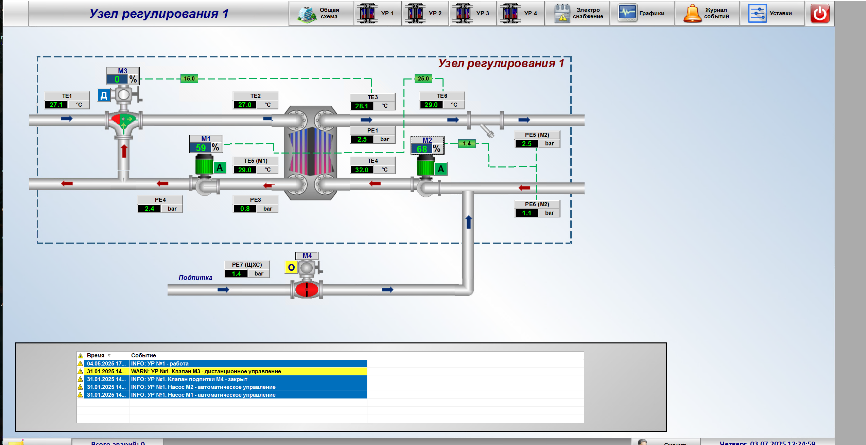

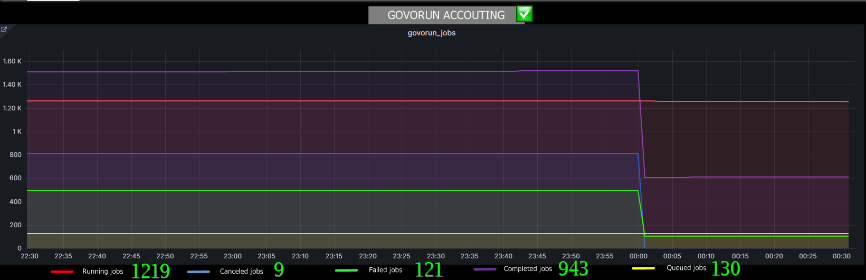

- MICC monitoring system of the Govorun supercomputer (MLIT, [2]). The MICC monitoring system, built on Grafana and using current data from the SLURM resource manager, is used to monitor the current state of the computing queues (partitions) of the Govorun supercomputer and the number of jobs being executed (Fig. 3-4).

Figure 3. MICC monitoring system, Grafana, MLIT.

Figure 4. Monitoring of the computing queues of the Govorun supercomputer, Grafana, MLIT.

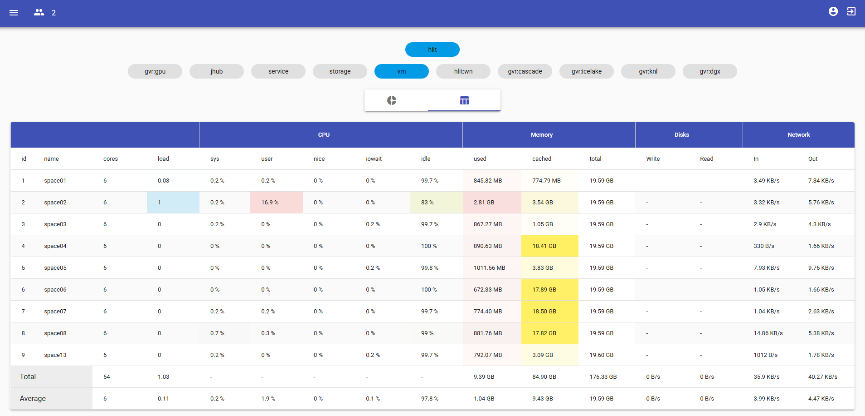

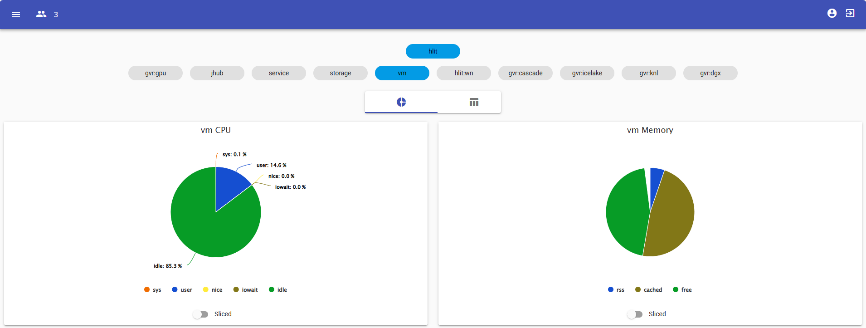

- SALSA monitoring system of computing resources (HybriLIT, [3]). The specialized SALSA monitoring system is used to monitor the operation of the hardware underlying the platform’s software and information environment. It tracks the summary load of computing resources (CPU/GPU, RAM, disk, network) in real time (Fig. 5-6).

Figure 5. Summary load of computing resources of a group of nodes, SALSA monitoring system, view: table, developed by the HybriLIT team.

Figure 6. Summary load of computing resources of a group of nodes, SALSA monitoring system, view: pie charts, developed by the HybriLIT team.

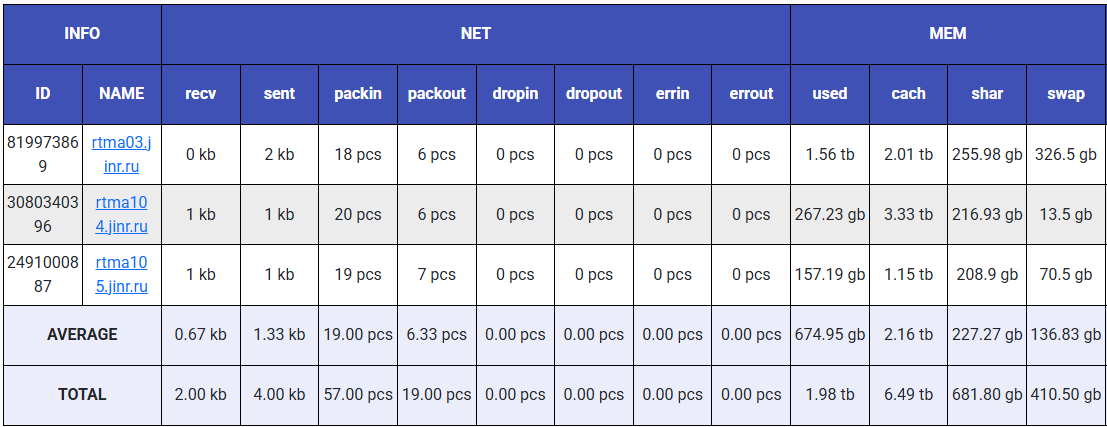
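The source does not describe how SALSA collects its data. As an illustration only, the following Python sketch shows one common way a Linux collector can derive the summary CPU load of a node: it reads the cumulative time counters on the aggregate `cpu` line of `/proc/stat` twice and converts the differences into percentages. The grouping of counters into `usr`, `sys` and `idle` and all function names are assumptions made for this example, not SALSA's implementation.

```python
import time
from typing import Dict

# Order of the first eight counters on the aggregate "cpu" line of Linux /proc/stat
# (cumulative clock ticks since boot; see proc(5)).
FIELDS = ("user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal")


def parse_cpu_line(line: str) -> Dict[str, int]:
    """Parse the aggregate 'cpu' line of /proc/stat into named tick counters."""
    parts = line.split()
    if len(parts) < 1 + len(FIELDS) or parts[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line of /proc/stat")
    # guest/guest_nice (if present) are already included in user/nice, so skip them.
    return dict(zip(FIELDS, (int(v) for v in parts[1:1 + len(FIELDS)])))


def cpu_load_percent(before: Dict[str, int], after: Dict[str, int]) -> Dict[str, float]:
    """Percentage of CPU time spent in usr/sys/idle between two snapshots.

    The usr/sys/idle grouping is an assumption of this sketch; 'steal' time
    counts toward the total but is not reported separately.
    """
    delta = {k: after[k] - before[k] for k in FIELDS}
    total = sum(delta.values())
    if total == 0:
        return {"usr": 0.0, "sys": 0.0, "idle": 0.0}
    return {
        "usr": 100.0 * (delta["user"] + delta["nice"]) / total,
        "sys": 100.0 * (delta["system"] + delta["irq"] + delta["softirq"]) / total,
        "idle": 100.0 * (delta["idle"] + delta["iowait"]) / total,
    }


def sample_cpu_load(interval_s: float = 1.0, path: str = "/proc/stat") -> Dict[str, float]:
    """Read /proc/stat twice, interval_s seconds apart, and return the load split."""
    def read() -> Dict[str, int]:
        with open(path) as f:
            return parse_cpu_line(f.readline())  # first line is the aggregate "cpu" line

    before = read()
    time.sleep(interval_s)
    return cpu_load_percent(before, read())
```

On a Linux node, `sample_cpu_load(1.0)` returns one reading; the same differencing applies to the per-core `cpuN` lines of the file if a per-core breakdown is wanted.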

The SALSA monitoring system, developed in 2016-2017, was primarily focused on tracking the summary load of the components of the physical servers and virtual machines that host the platform’s services. Upgrades in 2024-2025 increased the computing power of the Govorun supercomputer, and users of the HybriLIT heterogeneous platform are now able to run increasingly massive parallel jobs and arrays of such jobs. This creates competing load not only on computing resources but also on the local network and the data storage system of the platform. Monitoring the load created by resource-intensive user jobs has therefore become one of the important tasks of platform system administration.

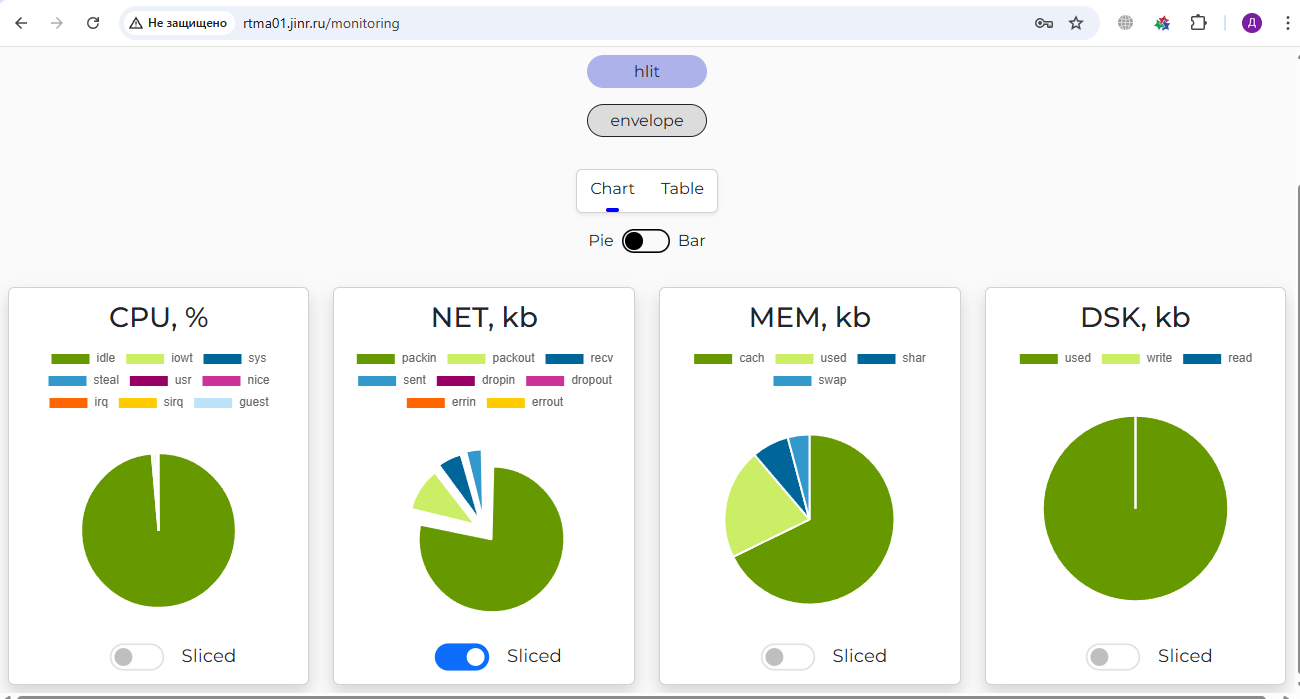

A new monitoring system, StarLIT, was developed under the research theme “Development and implementation of new systems for collecting and analyzing statistics of the usage of computing resources and applications of the HybriLIT heterogeneous platform” [4]. The new system is a logical evolution of the SALSA monitoring system. StarLIT supports five modes for monitoring the load on the components of a computing node:

- “Standard”: summary load by resource (the same mode as in SALSA);
- “Extended”: detailed load of the individual elements of each component;
- “Intraday”: analogous to “Extended”, with a history of up to 24 hours;
- “Analytic”: analogous to “Extended”, accumulating data for an external system that processes, analyzes and visualizes statistics;
- “SLURM”: load created by SLURM computing jobs.

The interface options are shown in Figures 7-12.
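The source states only that the “Intraday” mode keeps up to 24 hours of history and that it is viewed over ranges such as 5 minutes, 1 hour and 24 hours (Figures 9-11). A minimal Python sketch of such a bounded history, assuming a fixed sampling interval (60 s here, a hypothetical value) and an in-memory store; the class `IntradayHistory` and its methods are hypothetical and not part of StarLIT.

```python
from collections import deque
from typing import Deque, List, Tuple

DAY_SECONDS = 24 * 60 * 60


class IntradayHistory:
    """Hypothetical store keeping at most 24 hours of (timestamp, value) samples for one metric."""

    def __init__(self, interval_s: int = 60) -> None:
        # With a fixed sampling interval, 24 h of history is a fixed number of points;
        # deque(maxlen=...) drops the oldest sample automatically once it is full.
        self.interval_s = interval_s
        self.samples: Deque[Tuple[int, float]] = deque(maxlen=DAY_SECONDS // interval_s)

    def add(self, timestamp: int, value: float) -> None:
        """Append one sample; timestamps are assumed to arrive in increasing order."""
        self.samples.append((timestamp, value))

    def window(self, now: int, range_s: int) -> List[Tuple[int, float]]:
        """Return samples with timestamps in (now - range_s, now], e.g. range_s = 300, 3600, 86400."""
        start = now - range_s
        return [(t, v) for t, v in self.samples if start < t <= now]
```

The fixed `maxlen` caps memory at one day of points per metric; a deployed collector could instead persist the data in a time-series database and query the same ranges from it.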

Figure 7. Summary load of computing resources of a group of nodes, mode “Standard”, StarLIT monitoring system, view: table, developed by the HybriLIT team.

Figure 8. Summary load of computing resources of a group of nodes, mode “Standard”, StarLIT monitoring system, view: pie charts, developed by the HybriLIT team.

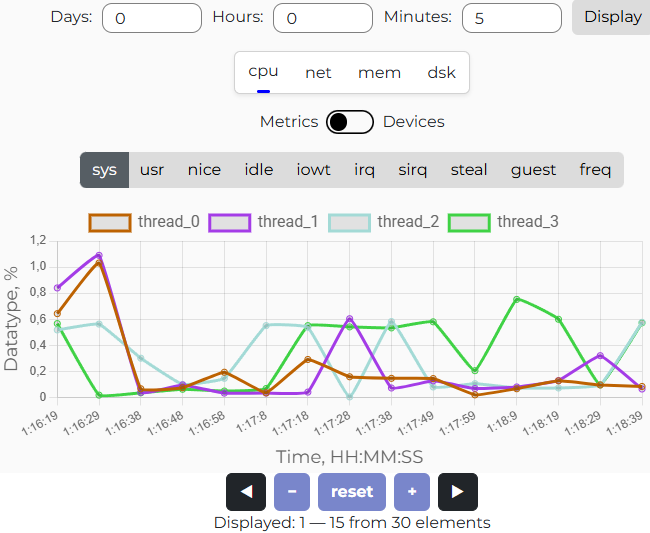

Figure 9. Monitoring of the CPU (sys) load of a computing node, mode “Intraday”, StarLIT monitoring system, view: graph, range: 5 min, developed by the HybriLIT team.

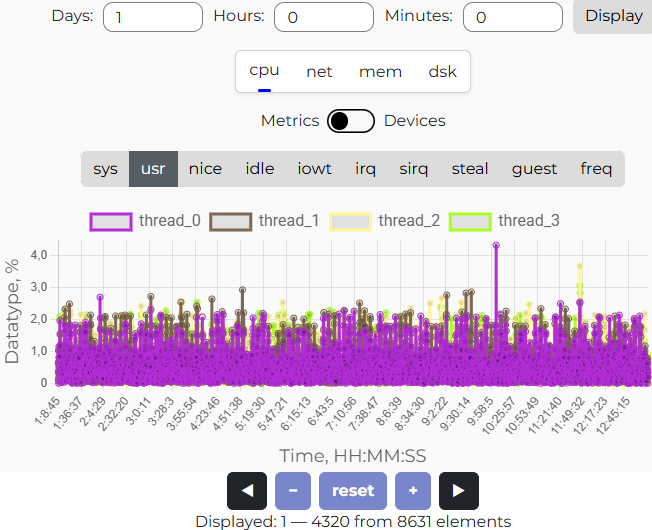

Figure 10. Monitoring of the CPU (usr) load of a computing node, mode “Intraday”, StarLIT monitoring system, view: graphs, range: 1 hour, developed by the HybriLIT team.

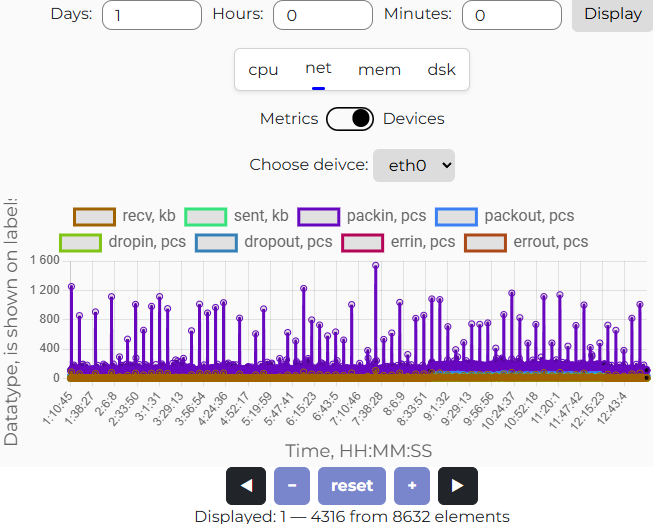

Figure 11. Monitoring of the NET (eth0) load of a computing node, mode “Intraday”, StarLIT monitoring system, view: graphs, range: 24 hours, developed by the HybriLIT team.

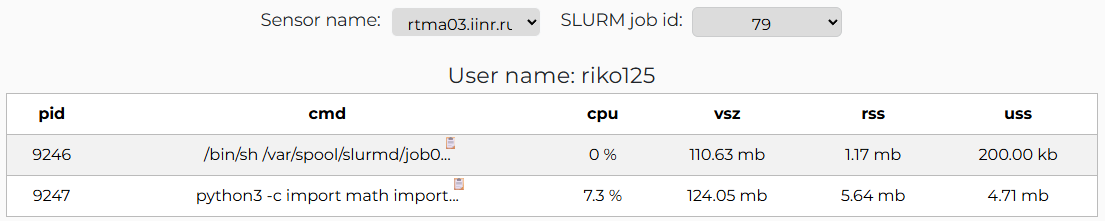

Figure 12. Monitoring of the load of computing resources of a computing node by SLURM jobs, mode “SLURM”, StarLIT monitoring system, view: table, developed by the HybriLIT team.
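How StarLIT associates node load with SLURM jobs is not described here. One hedged starting point is to ask SLURM which jobs are running on a given node using standard `squeue` options (`--noheader`, `--states`, `--nodelist`, `--format`). The format string, the `JobRow` type and the helper functions below are assumptions for illustration only; note that the `%C` field reports the CPU count of the whole job, so for multi-node jobs it is not the per-node share.

```python
import subprocess
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical field choice: job id, user, partition, CPU count (squeue --format codes).
SQUEUE_FORMAT = "%i|%u|%P|%C"


@dataclass
class JobRow:
    job_id: str
    user: str
    partition: str
    cpus: int  # for multi-node jobs %C is the job's total, not the per-node share


def parse_squeue(output: str) -> List[JobRow]:
    """Parse '|'-separated squeue output produced with SQUEUE_FORMAT."""
    rows = []
    for line in output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        job_id, user, partition, cpus = (field.strip() for field in line.split("|"))
        rows.append(JobRow(job_id, user, partition, int(cpus)))
    return rows


def running_jobs_on_node(node: str) -> List[JobRow]:
    """Query SLURM for the jobs currently running on one node (requires squeue on PATH)."""
    result = subprocess.run(
        ["squeue", "--noheader", "--states=RUNNING",
         f"--nodelist={node}", f"--format={SQUEUE_FORMAT}"],
        capture_output=True, text=True, check=True,
    )
    return parse_squeue(result.stdout)


def cpus_by_user(rows: List[JobRow]) -> Dict[str, int]:
    """Sum the CPU counts of the given jobs per user."""
    totals: Dict[str, int] = {}
    for row in rows:
        totals[row.user] = totals.get(row.user, 0) + row.cpus
    return totals
```

For example, `cpus_by_user(running_jobs_on_node("node01"))`, with a hypothetical node name, summarizes the CPUs held per user on that node; relating these jobs to the measured node load would require additional per-job data not shown in this sketch.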

The StarLIT monitoring system is currently deployed on the HybriLIT heterogeneous platform in test mode and is being prepared for production operation.

The results of this work [5] were reported at the 11th International Conference “Distributed Computing and GRID Technologies in Science and Education” (GRID-2025), July 7-11, 2025, JINR, Dubna, Russia.

References

[1] RSC Liquid Cooling. URL: https://rscgroup.ru/technology/liquidcooling/

[2] Multi-level Monitoring System for Multifunctional and Computing Complex at JINR / Baginyan A.S., Balashov N.A., Baranov A.V., Belov S.D., Belyakov D.V., Butenko Yu.A., Dolbilov A.G., Golunov A.O., Kadochnikov I.S., Kashunin I.A., Korenkov V.V., Kutovskiy N.A., Mayorov A.V., Mitsyn V.V., Pelevanyuk I.S., Semenov R.N., Strizh T.A., Trofimov V.V., Vala M. // Proceedings of the 26th International Symposium on Nuclear Electronics & Computing (NEC-2017). 2017. P. 226-233.

[3] Development of the Stat-HybriLIT service for monitoring the HybriLIT heterogeneous cluster / Vala M., Mayorov A.V., Butenko Yu.A. // Proceedings of the conference “Information and Telecommunication Technologies and Mathematical Modeling of High-Tech Systems” (ITTMM-2017). 2017. P. 209-211.

[4] Support and development of the JINR MICC. URL: http://indico.jinr.ru/event/5170/contributions/31733/

[5] Development of the monitoring system for computing resources of the HybriLIT heterogeneous platform / Belyakov D.V., Dereza D.G., Zuev M.I., Karpov G.A., Lebedev M.P., Skazkin M.A. // Section talk, 11th International Conference “Distributed Computing and GRID Technologies in Science and Education” (GRID-2025), July 8, 2025, JINR, Dubna, Russia. URL: http://indico.jinr.ru/event/5170/contributions/31733/