About the supercomputer

On March 27, during the session of the Committee of Plenipotentiaries of the Governments of the JINR Member States, the new supercomputer named after Nikolai Nikolaevich Govorun, who actively contributed to the development of information technologies at JINR, will be presented.

The “Govorun” supercomputer is a project of the Bogoliubov Laboratory of Theoretical Physics and the Laboratory of Information Technologies, supported by the JINR Directorate.

The project aims to significantly accelerate complex theoretical and experimental research in nuclear physics and condensed matter physics conducted at JINR, including the NICA project.

The supercomputer is a further development of the HybriLIT heterogeneous platform that increases both its CPU and GPU performance. The updated computing cluster will make it possible to:

- carry out resource-intensive parallel lattice QCD computations to study the properties of hadronic matter at high baryon density, at high temperature and in the presence of strong electromagnetic fields;

- substantially increase the efficiency of modeling the dynamics of relativistic heavy-ion collisions;

- develop and adapt software for the NICA megaproject to the new computing architectures of the leading HPC vendors, Intel and NVIDIA;

- create an HPC-based hardware and software environment;

- train IT specialists in all the required areas.

The CPU component is expanded using a specialized HPC engineering infrastructure with liquid cooling technology from the Russian company RSC Group. RSC is a leading developer and full-cycle system integrator of new-generation supercomputing solutions in Russia and the CIS, based on Intel architectures, innovative liquid cooling technology and its own know-how. The GPU component is expanded with latest-generation compute servers equipped with NVIDIA Volta GPUs. Equipment procurement and pre-commissioning are carried out by IBS Platformix.

Technical Specifications


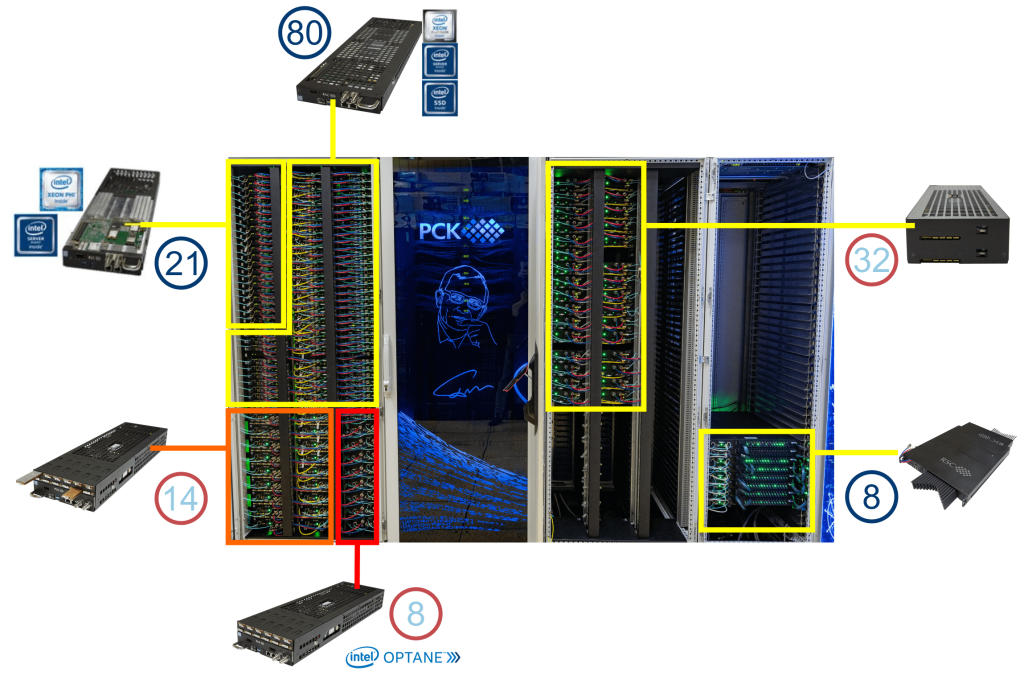

CPU component

RSC Group has developed an updated superdense, scalable and energy-efficient cluster solution: a set of components for building modern computing systems of various sizes with 100% liquid cooling in ‘hot water’ mode. It includes high-performance compute nodes based on Intel Xeon Phi and Intel Xeon Scalable processors, combined with a high-speed Intel Omni-Path interconnect whose switches use the same ‘hot water’ liquid cooling.

16th place in the Top50 list of the most powerful supercomputers in Russia and the CIS, with a peak single-precision performance of 1070 TFLOPS.

Specifications

Intel Xeon Phi nodes:

- Intel® Xeon Phi™ 7290 processors (72 cores)

- Intel® Server Board S7200AP

- Intel® SSD DC S3520 (SATA, M.2)

- 96 GB DDR4 2400 MHz RAM

- Intel® Omni-Path 100 Gb/s adapter

Intel Xeon Platinum 8268 nodes:

- Intel® Xeon® Platinum 8268 processors (24 cores)

- Intel® Server Board S2600BP

- Intel® SSD DC S4510 (SATA, M.2), 2x Intel® SSD DC P4511 (NVMe, M.2) 2 TB

- 192 GB DDR4 2933 MHz RAM

- Intel® Omni-Path 100 Gb/s adapter

Intel Xeon Platinum 8280 nodes:

- Intel® Xeon® Platinum 8280 processors (28 cores)

- Intel® Server Board S2600BP

- Intel® SSD DC S4510 (SATA, M.2), 2x Intel® SSD DC P4511 (NVMe, M.2) 2 TB / 4x Intel® Optane™ Persistent Memory (PMem) 450 GB

- 192 GB DDR4 2933 MHz RAM

- Intel® Omni-Path 100 Gb/s adapter

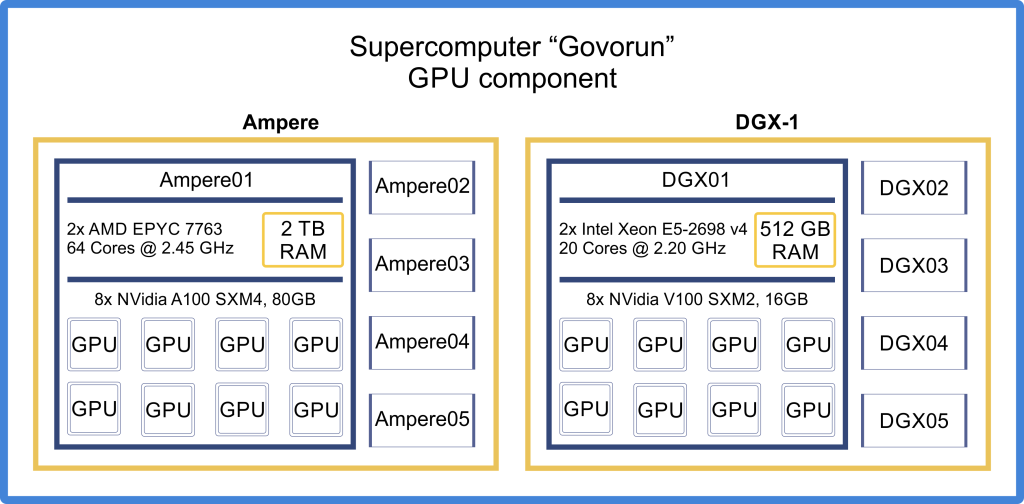

GPU component

The “Govorun” supercomputer includes five Niagara R4206SG servers and five NVIDIA DGX-1 servers.

Niagara R4206SG

The Niagara R4206SG server is designed for data centers and targets artificial intelligence, data analytics and high-performance computing workloads. Five servers based on the Niagara R4206SG model (Supermicro AS -4124GO-NART+) with NVIDIA A100 graphics accelerators have been installed and configured on the “Govorun” supercomputer. Each R4206SG server supports up to 256 threads (its 2 × 64 CPU cores with two-way simultaneous multithreading) and has 2 TB of RAM. Each server carries eight NVIDIA A100 graphics accelerators, each with 80 GB of memory, 6,912 graphics cores (CUDA cores), 432 texture mapping units (TMUs) and 160 render output units (ROPs).
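As a quick check of these figures, the per-server and partition-wide totals can be worked out directly. This is a minimal sketch: the two-way SMT setting is an assumption inferred from the 256-thread figure, and the FP64 value per GPU is taken from the A100 characteristics listed for this server.

```python
# Totals for the Niagara R4206SG partition, using the figures quoted on this page.
SOCKETS_PER_SERVER = 2        # 2x AMD EPYC 7763
CORES_PER_SOCKET = 64
SMT_WAYS = 2                  # assumption: two-way SMT, implied by "256 threads"
GPUS_PER_SERVER = 8           # 8x NVIDIA A100 SXM4
GPU_MEMORY_GB = 80
FP64_TFLOPS_PER_GPU = 9.746   # FP64 figure from the A100 characteristics list
SERVERS = 5

threads_per_server = SOCKETS_PER_SERVER * CORES_PER_SOCKET * SMT_WAYS
gpu_memory_per_server_gb = GPUS_PER_SERVER * GPU_MEMORY_GB
total_gpu_memory_gb = SERVERS * gpu_memory_per_server_gb
# Aggregate non-tensor FP64 peak over all A100s in the five servers
total_fp64_tflops = SERVERS * GPUS_PER_SERVER * FP64_TFLOPS_PER_GPU

print(threads_per_server)           # 256
print(gpu_memory_per_server_gb)     # 640
print(total_gpu_memory_gb)          # 3200
print(round(total_fp64_tflops, 2))  # 389.84
```

These are theoretical peak values; sustained application performance is lower.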

Technical characteristics of the Niagara R4206SG server and the Ampere A100 graphics accelerator:

- CPU: 2x AMD EPYC 7763, 64 Cores @ 2.45 GHz

- RAM: 2 TB

- NET: 2x NVIDIA Mellanox 100 Gbit/sec, 2x Supermicro AIOM 100 Gbit/sec (Ethernet)

- GPU: 8x NVIDIA A100 SXM4, 80 GB

- CUDA Cores: 6912

- Tensor Cores: 432 (Gen.3)

- TMUs: 432

- ROPs: 160

- SM: 108

- L1 cache: 192 KB (per SM)

- L2 cache: 40 MB

- FP16: 77.97 TFLOPS

- FP32: 19.49 TFLOPS

- FP64: 9.746 TFLOPS

- Deep Learning: 624 TFLOPS
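The FP16, FP32 and FP64 values above are consistent with the usual peak-throughput formula: FP32 peak = CUDA cores × 2 operations per fused multiply-add × clock. The sketch below assumes a GA100 layout of 64 FP32 cores and 4 Tensor Cores per SM, a boost clock of 1410 MHz, an FP64:FP32 ratio of 1:2 and a non-tensor FP16:FP32 ratio of 4:1; none of these parameters is stated on this page.

```python
# Peak-throughput sketch for one NVIDIA A100 SXM4 (non-tensor arithmetic).
SM_COUNT = 108
FP32_CORES_PER_SM = 64     # assumption: GA100 SM layout
TENSOR_CORES_PER_SM = 4    # assumption: GA100 SM layout
BOOST_CLOCK_GHZ = 1.41     # assumption: A100 SXM4 boost clock
FLOPS_PER_FMA = 2          # one fused multiply-add counts as two operations

cuda_cores = SM_COUNT * FP32_CORES_PER_SM
tensor_cores = SM_COUNT * TENSOR_CORES_PER_SM

# cores * ops/clock * GHz gives GFLOPS; divide by 1000 for TFLOPS
fp32_tflops = cuda_cores * FLOPS_PER_FMA * BOOST_CLOCK_GHZ / 1000
fp64_tflops = fp32_tflops / 2   # assumption: 1:2 FP64:FP32 ratio
fp16_tflops = fp32_tflops * 4   # assumption: 4:1 FP16:FP32 ratio

print(cuda_cores, tensor_cores)  # 6912 432
print(round(fp32_tflops, 2))     # 19.49
print(round(fp64_tflops, 3))     # 9.746
print(round(fp16_tflops, 2))     # 77.97
```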

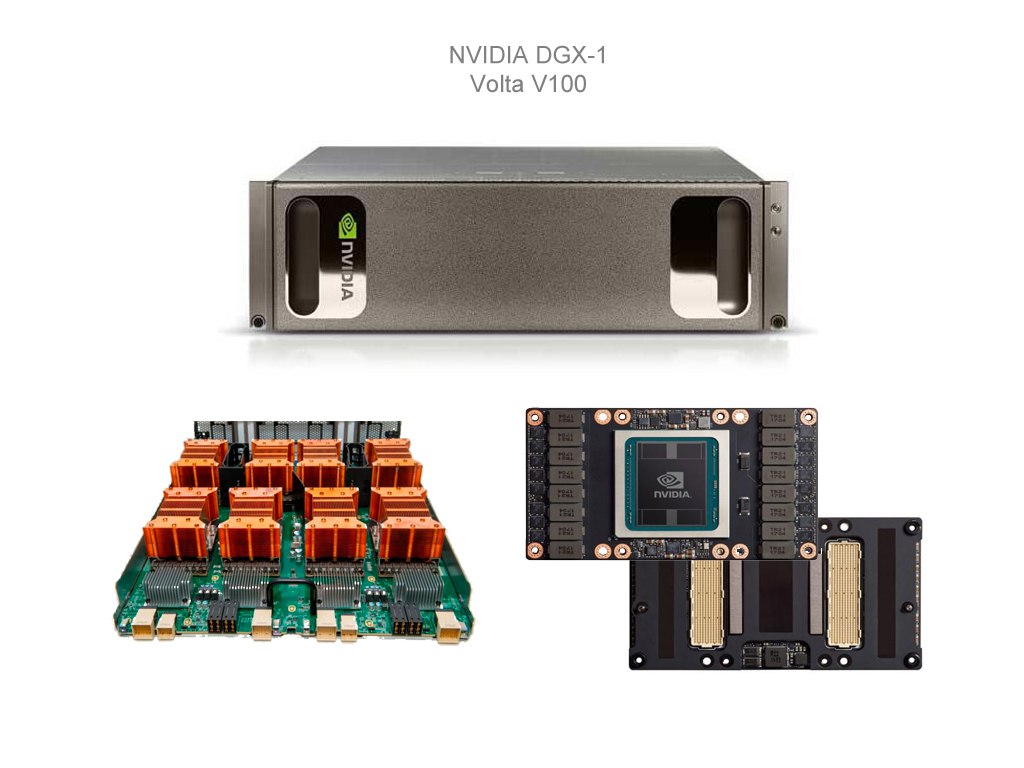

NVIDIA DGX-1

The NVIDIA DGX-1 hardware and software platform is the world’s first system designed specifically for deep learning and accelerated data analytics in artificial intelligence. It contains eight NVIDIA V100 graphics accelerators based on the Volta architecture.

The DGX-1 platform can process and analyze data with performance comparable to that of 250 x86 servers.

Technical characteristics of the DGX-1 server and the Volta V100 graphics accelerator:

- CPU: 2x Intel Xeon E5-2698 v4, 20 Cores @ 2.20 GHz

- RAM: 512 GB

- NET: 2x Mellanox InfiniBand 100 Gbit/sec, 1x Mellanox InfiniBand 100 Gbit/sec (Ethernet), 1x Intel Omni-Path 100 Gbit/sec

- GPU: 8x NVIDIA V100 SXM2, 16 GB

- CUDA Cores: 5120

- Tensor Cores: 640 (Gen.1)

- TMUs: 320

- ROPs: 128

- SM: 80

- L1 cache: 128 KB (per SM)

- L2 cache: 6 MB

- FP16: 31.33 TFLOPS

- FP32: 15.67 TFLOPS

- FP64: 7.834 TFLOPS

- Deep Learning: 125.92 TFLOPS
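The same peak-throughput formula reproduces the V100 figures and gives the per-DGX-1 totals. This sketch assumes a GV100 layout of 64 FP32 cores and 8 Tensor Cores per SM, a boost clock of 1530 MHz, an FP64:FP32 ratio of 1:2 and a non-tensor FP16:FP32 ratio of 2:1; these parameters are not given on this page.

```python
# Peak-throughput sketch for one NVIDIA V100 SXM2 and one DGX-1 (8 GPUs).
SM_COUNT = 80
FP32_CORES_PER_SM = 64     # assumption: GV100 SM layout
TENSOR_CORES_PER_SM = 8    # assumption: GV100 SM layout
BOOST_CLOCK_GHZ = 1.53     # assumption: V100 SXM2 boost clock
FLOPS_PER_FMA = 2          # one fused multiply-add counts as two operations
GPUS_PER_DGX1 = 8
GPU_MEMORY_GB = 16

cuda_cores = SM_COUNT * FP32_CORES_PER_SM
tensor_cores = SM_COUNT * TENSOR_CORES_PER_SM

# cores * ops/clock * GHz gives GFLOPS; divide by 1000 for TFLOPS
fp32_tflops = cuda_cores * FLOPS_PER_FMA * BOOST_CLOCK_GHZ / 1000
fp64_tflops = fp32_tflops / 2   # assumption: 1:2 FP64:FP32 ratio
fp16_tflops = fp32_tflops * 2   # assumption: 2:1 FP16:FP32 ratio

dgx1_fp64_tflops = GPUS_PER_DGX1 * fp64_tflops
dgx1_gpu_memory_gb = GPUS_PER_DGX1 * GPU_MEMORY_GB

print(cuda_cores, tensor_cores)       # 5120 640
print(round(fp32_tflops, 2))          # 15.67
print(round(fp64_tflops, 3))          # 7.834
print(round(fp16_tflops, 2))          # 31.33
print(round(dgx1_fp64_tflops, 2))     # 62.67
print(dgx1_gpu_memory_gb)             # 128
```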


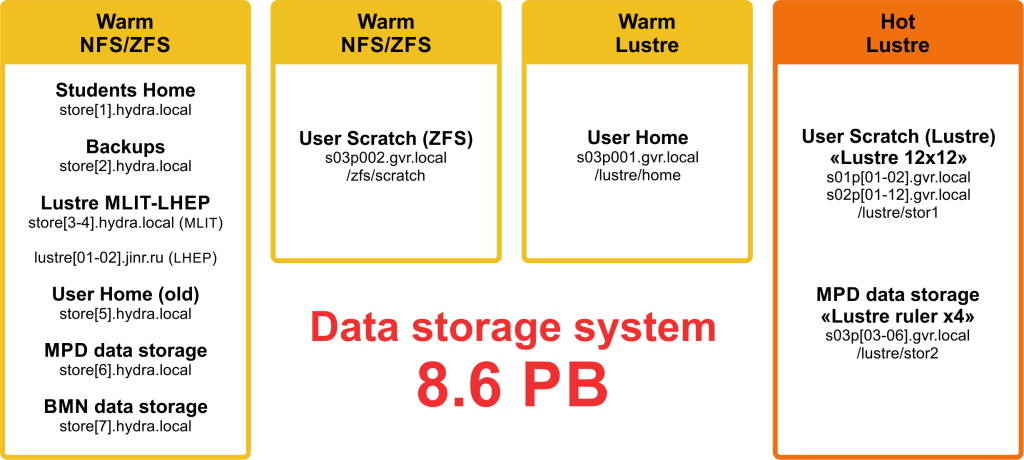

Data storage systems


The “Govorun” supercomputer includes the RSC Storage on-Demand network storage system, a unified, centrally managed system with several storage tiers: very hot, hot and warm data.

- The very hot data storage system is created on top of four RSC Tornado TDN511S blade servers. Each server features 12 high-speed, low-latency Intel® Optane™ SSDs DC P4801X 375GB M.2 Series with Intel® Memory Drive Technology (IMDT), delivering 4.2 TB for very hot data per server.

- The hot and warm data storage system comprises a static storage system with the Lustre parallel file system, built on 14 RSC Tornado TDN511S blade servers, and a dynamic RSC Storage on-Demand system on 84 RSC Tornado TDN511 blade servers, also supporting the Lustre parallel file system.
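The capacity figures for the very hot tier can be cross-checked with simple arithmetic. In this sketch, the raw sum of the twelve 375 GB drives comes to 4.5 TB (decimal), somewhat above the 4.2 TB quoted per server; the page does not explain the difference, so the aggregate below uses the quoted figure. The role labels in the dictionary are illustrative.

```python
# Capacity arithmetic for the storage system, using figures quoted on this page.
SSDS_PER_SERVER = 12
SSD_CAPACITY_GB = 375          # Intel Optane SSD DC P4801X, 375 GB
QUOTED_TB_PER_SERVER = 4.2     # very hot data capacity per server, as quoted
VERY_HOT_SERVERS = 4

raw_tb_per_server = SSDS_PER_SERVER * SSD_CAPACITY_GB / 1000   # decimal terabytes
total_very_hot_tb = VERY_HOT_SERVERS * QUOTED_TB_PER_SERVER

# Blade servers in the storage system, grouped by role (labels are illustrative)
storage_blades = {"very hot": 4, "static Lustre": 14, "dynamic Lustre": 84}

print(raw_tb_per_server)             # 4.5
print(round(total_very_hot_tb, 1))   # 16.8
print(sum(storage_blades.values()))  # 102
```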

Low-latency Intel® Optane™ SSDs DC P4801X 375GB M.2 Series provide fast access to Lustre file system metadata, and Intel® SSDs DC P4511 (NVMe, M.2) are used for Lustre hot data storage.

The network infrastructure module comprises a communication and transport network, a control and monitoring network, and a task management network. The NVIDIA DGX-1 servers are interconnected by a communication and transport network based on InfiniBand 100 Gbps technology, and this component communicates with the CPU module via Intel Omni-Path 100 Gbps. The communication and transport network of the CPU module uses Intel Omni-Path 100 Gbps technology and is built as a “fat tree” topology based on 48-port Intel Omni-Path Edge 100 Series switches with full liquid cooling.
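For a sense of scale, a common sizing rule for a two-level, non-blocking fat tree built from identical k-port switches is: each leaf switch gives k/2 ports to compute nodes and k/2 to uplinks (one to every spine), and each spine port reaches a different leaf, which allows up to k leaves, k/2 spines and k²/2 nodes. The page does not state how many levels or switches the Govorun network actually uses; the function below is a hypothetical illustration of the arithmetic for 48-port switches only.

```python
def two_level_fat_tree(ports: int) -> dict[str, int]:
    """Maximum size of a two-level, non-blocking fat tree of identical switches."""
    if ports % 2:
        raise ValueError("port count must be even")
    down = ports // 2     # leaf ports facing compute nodes
    spines = ports // 2   # each leaf sends one uplink to every spine
    leaves = ports        # each spine port connects to a different leaf
    return {"leaves": leaves, "spines": spines, "max_nodes": leaves * down}

print(two_level_fat_tree(48))  # {'leaves': 48, 'spines': 24, 'max_nodes': 1152}
```

Larger installations add a third switch level, and smaller ones simply leave leaf or spine ports unused.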

An equally essential part of the “Govorun” supercomputer architecture is the RSC BasIS supercomputer control software. RSC BasIS uses the CentOS Linux version 7.8 operating system on all compute nodes and performs the following functions:

- monitors compute nodes and performs an emergency shutdown when critical malfunctions (such as compute node overheating) are detected;

- collects performance indicators of communication and transport network components;

- collects performance indicators of compute nodes, namely, processor and RAM load;

- stores monitored indicators with the ability to view statistics for a given time interval (at least one year);

- collects readings of the integral status indicator of compute nodes and displays them on a graphical view of the compute rack;

- displays the status of the leak detection system, based on humidity sensors on compute nodes, on a graphical view of the compute rack;

- shows the cluster user how efficiently the resources allocated via the SLURM scheduler are used by a specific job, as the average load (%) of the CPUs allocated to that user;

- displays the availability of compute nodes over the computing network and the control network on a graphical view of the compute rack.
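One common way to express an average CPU load indicator for a job is the CPU time consumed divided by the CPU time available (allocated CPUs × wall-clock time), similar in spirit to the CPU efficiency derived from SLURM accounting fields such as `TotalCPU`, `Elapsed` and `AllocCPUS`. How RSC BasIS computes its indicator is not described here; the record type and function below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class JobUsage:
    """Hypothetical per-job accounting record (field names are illustrative)."""
    allocated_cpus: int
    elapsed_seconds: float     # wall-clock duration of the job
    total_cpu_seconds: float   # CPU time summed over all allocated CPUs


def average_cpu_load_percent(job: JobUsage) -> float:
    """Average load of the job's allocated CPUs, in percent."""
    capacity = job.allocated_cpus * job.elapsed_seconds
    if capacity <= 0:
        raise ValueError("job needs allocated CPUs and a positive elapsed time")
    return 100.0 * job.total_cpu_seconds / capacity


# A job holding 48 CPUs for one hour that accumulated 36 CPU-hours of work
job = JobUsage(allocated_cpus=48, elapsed_seconds=3600, total_cpu_seconds=36 * 3600)
print(average_cpu_load_percent(job))  # 75.0
```

A value well below 100% usually means the job requested more CPUs than it keeps busy.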