The supercomputer was built on new designs and technologies. It is considered a further development of the HybriLIT heterogeneous platform and significantly increases the performance of both the CPU and GPU components of the platform (1 petaflops in single precision and 500 teraflops in double precision). The upgraded computing cluster supports resource-intensive, massively parallel lattice QCD calculations for studying the properties of hadronic matter at high baryon density, at high temperature and in the presence of strong electromagnetic fields, and it qualitatively increases the efficiency of modeling the dynamics of relativistic heavy-ion collisions. The supercomputer consists of two components: one based on Intel central processors and the other on NVIDIA graphics processors. This makes it possible to develop and adapt software for the NICA mega-project to new computing architectures from key players in the HPC market, Intel and NVIDIA, as well as to create an HPC-based software and hardware environment and to train IT specialists in all the necessary fields.

The project aims to radically accelerate the complex theoretical and experimental research carried out at JINR, including research at the NICA complex. The inaugural presentation of the Govorun supercomputer took place on March 27, 2018.

CPU component based on Intel processors

This part of the new JINR supercomputer project was implemented with the participation of experts from the RSC Group and Intel Corporation. RSC has developed an updated ultra-dense, scalable and energy-efficient cluster solution called “RSC Tornado”, a set of components for building modern computing systems of various sizes with 100% liquid cooling in “hot water” mode.

Picture 1. Computing module

The supercomputer is built on the high-density, energy-efficient RSC Tornado solution, which uses Intel server technologies with direct liquid cooling. The computing nodes use Intel server products: the most powerful 72-core Intel® Xeon Phi™ 7290 server processors, Intel® Xeon® Scalable processors (Intel® Xeon® Gold 6154 models), Intel® Server Board S7200AP and Intel® Server Board S2600BP, Intel® SSD DC S3520 drives with SATA M.2 connectivity, and the latest high-speed 1 TB Intel® SSD DC P4511 NVMe drives.

Picture 2. Intel® Omni-Path Edge Switch 100 Series switch

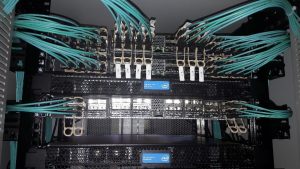

For high-speed data transfer between computing nodes, the JINR supercomputer complex uses Intel® Omni-Path switching technology, which provides non-blocking switching at up to 100 Gbit/s. It is based on 48-port Intel® Omni-Path Edge Switch 100 Series switches with 100% liquid cooling, which ensures high cooling efficiency in hot water mode and the lowest total cost of ownership for the system. Intel® Omni-Path Architecture not only satisfies the current needs of resource-intensive user applications but also provides the network bandwidth needed in the future.

Cooling system

The Joint Institute for Nuclear Research has installed new universal “RSC Tornado” computing cabinets with record energy density and a precision liquid cooling system balanced for continuous operation with high-temperature coolant (up to +63 °C at the inlet of the computing cabinet). Given the equipment siting conditions at JINR, the optimal operating mode chosen for the computing cabinet is a constant coolant temperature of +45 °C at the inlet of the computing nodes (with a peak value of up to +57 °C).

Picture 3. Dry Cooler Installation

Operating in “hot water” mode made it possible to use free cooling all year round (24x7x365), relying only on dry cooling towers that cool the liquid with ambient air on any day of the year, and to eliminate the freon circuit and chillers entirely. As a result, the system’s annual average PUE (power usage effectiveness, a measure of energy efficiency) is less than 1.06. In other words, less than 6% of all electricity consumed is spent on cooling, which is an outstanding result for the HPC industry.
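The relationship between the PUE value and the share of energy spent on overhead can be checked with a short calculation. The sketch below assumes the standard definition of PUE (total facility energy divided by IT equipment energy); the function names are illustrative and not part of any RSC or JINR tooling.

```python
def overhead_share_of_total(pue: float) -> float:
    """Fraction of total facility energy that does NOT reach the IT equipment.

    Assumes PUE = total facility energy / IT equipment energy.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be lower than 1.0")
    return (pue - 1.0) / pue


def overhead_kw(pue: float, it_load_kw: float) -> float:
    """Overhead power (cooling, distribution losses) for a given IT load."""
    if pue < 1.0:
        raise ValueError("PUE cannot be lower than 1.0")
    return (pue - 1.0) * it_load_kw


# PUE value quoted in the text.
share = overhead_share_of_total(1.06)
print(f"Overhead share of total energy: {share:.1%}")
```

For a PUE of 1.06 the overhead share of total consumption is about 5.7%, which is consistent with the "less than 6%" figure above.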

RSC direct liquid cooling technology removes heat from the server precisely, using a liquid-cooled cold plate that covers the entire component-bearing surface of the computing node. This approach removes heat from the whole area of the server components and eliminates local overheating and air pockets, which extends the service life of electronic components and improves the fault tolerance of the entire solution.

To implement such a cooling system, a dry cooling tower was installed. Its task is to cool the coolant used in the process: the liquid supplied to the heat exchanger is cooled by a flow of ambient air drawn in by the fan unit.

Because of the local climate, an antifreeze solution was chosen as the coolant.

From the cooling tower, the ethylene glycol solution flows to the collector and then into a heat exchange unit, where it absorbs thermal energy from the water that circulates directly through the supercomputer’s computing nodes.

The supercomputer receives water cooled to 45 °C. After passing through the entire circuit inside the supercomputer, the water, now heated to 50 °C, returns to the heat exchanger, where it is cooled by transferring thermal energy to the hydraulic circuit of the dry cooling tower.
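The 5-degree rise between supply and return water allows a rough estimate of the required water flow from the heat balance Q = m · c · ΔT. The sketch below is only an order-of-magnitude illustration under stated assumptions: the heat load is taken as the 100 kW full-load rack consumption quoted in the power supply section, all of that power is assumed to end up as heat in the water, and the specific heat of water is approximated as 4180 J/(kg·K). None of these are measured values for the actual installation.

```python
# Approximate specific heat capacity of liquid water near 45-50 °C, J/(kg*K).
WATER_SPECIFIC_HEAT = 4180.0


def mass_flow_kg_per_s(heat_load_w: float, inlet_c: float, outlet_c: float,
                       specific_heat: float = WATER_SPECIFIC_HEAT) -> float:
    """Water mass flow needed to carry away heat_load_w watts.

    Derived from Q = m_dot * c * (T_out - T_in).
    """
    delta_t = outlet_c - inlet_c
    if delta_t <= 0:
        raise ValueError("Outlet temperature must exceed inlet temperature")
    return heat_load_w / (specific_heat * delta_t)


# Assumption: one rack at full load (100 kW), water heated from 45 °C to 50 °C.
flow = mass_flow_kg_per_s(100_000.0, 45.0, 50.0)
print(f"Required water flow: {flow:.2f} kg/s")
```

Under these assumptions, a fully loaded rack needs a little under 5 kg of water per second; a larger temperature difference would lower the required flow proportionally.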

The capacity of the cooling system is smoothly adjustable, so its power can be increased or decreased to match the actual load. This significantly reduces energy consumption at partial load.

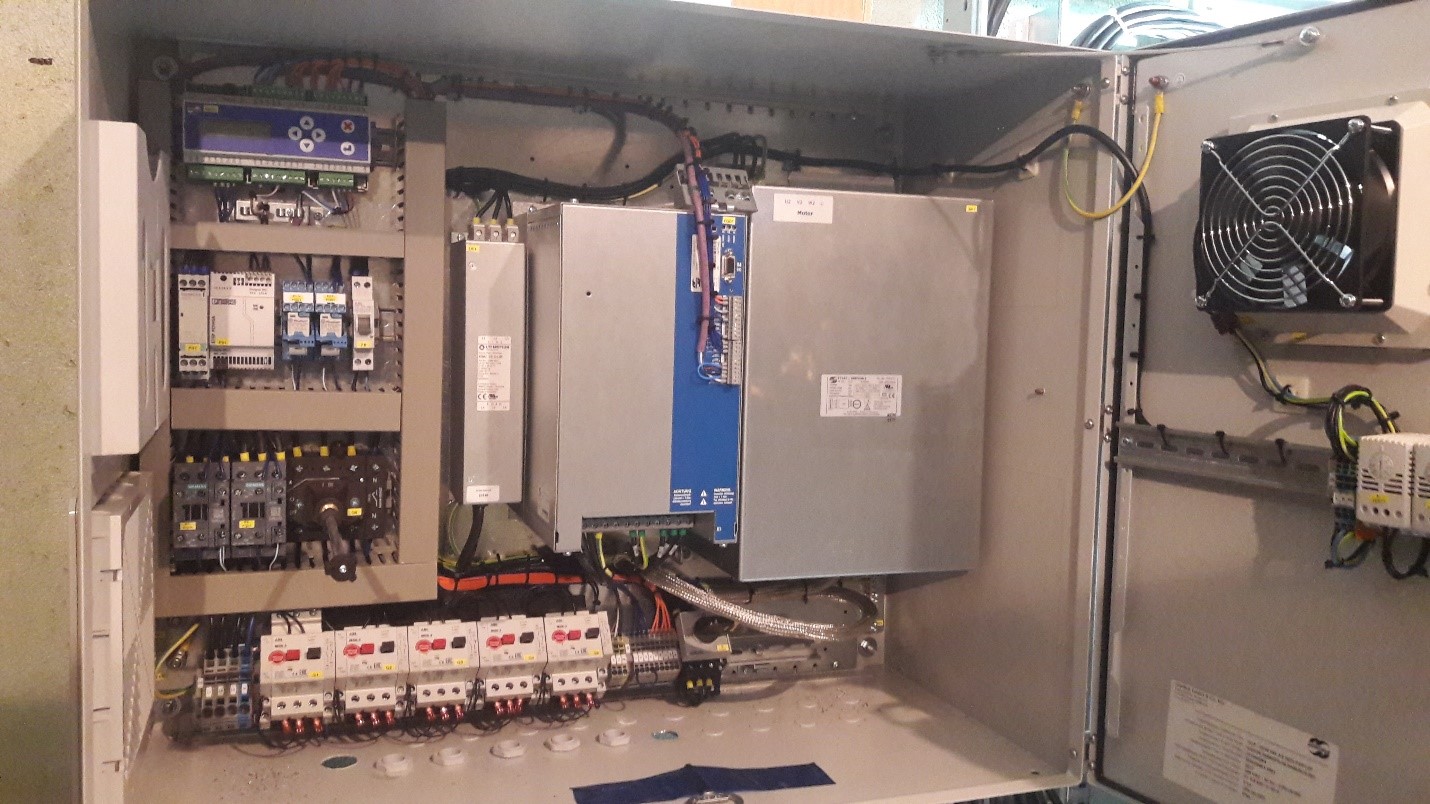

Power supply system

The distribution board is fed through a 400 A input circuit breaker. An entire RSC Tornado rack consumes up to 100 kW under full load.

The cooling tower switchboard uses electronics to measure coolant temperature and control fan speed.

“RSC BasIS” software stack for monitoring and management

The high availability, fault tolerance and ease of use of computing systems built on RSC high-performance computing solutions are also ensured by an advanced management and monitoring system based on RSC BasIS software. It makes it possible to manage both individual nodes and the entire solution, including infrastructure components. All elements of the complex (computing nodes, power supplies, hydroregulation modules, etc.) have a built-in control module, which provides detailed telemetry and flexible control. The cabinet design allows computing nodes, power supplies and elements of the hydraulic system (where redundancy is provided) to be hot-swapped without interrupting the operation of the complex. Most system components (such as compute nodes, power supplies, networking and infrastructure components) are software-defined, which significantly simplifies and speeds up initial deployment, maintenance and subsequent system upgrades. Liquid cooling of all components ensures a long service life.

GPU component based on NVIDIA processors

The GPU component of the supercomputer includes five NVIDIA DGX-1 servers. Each server has eight NVIDIA Tesla V100 GPUs based on the latest NVIDIA Volta architecture. In total, a single NVIDIA DGX-1 server contains 40,960 CUDA cores, which is equivalent in processing power to 800 high-performance CPUs.
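The core counts follow directly from the figures given in this section (five servers, eight GPUs per server, 5,120 CUDA cores per GPU). A minimal sketch of the arithmetic, with illustrative constant names:

```python
SERVERS = 5                  # NVIDIA DGX-1 servers in the GPU component
GPUS_PER_SERVER = 8          # Tesla V100 GPUs per DGX-1
CUDA_CORES_PER_GPU = 5120    # CUDA cores per Tesla V100

# Cores in one server and in the whole GPU component.
cores_per_server = GPUS_PER_SERVER * CUDA_CORES_PER_GPU
total_gpus = SERVERS * GPUS_PER_SERVER
total_cores = SERVERS * cores_per_server

print(f"CUDA cores per DGX-1 server: {cores_per_server}")
print(f"GPUs in the GPU component:   {total_gpus}")
print(f"CUDA cores in total:         {total_cores}")
```

The per-server result matches the 40,960 CUDA cores quoted above; across all five servers this gives 40 GPUs and 204,800 CUDA cores.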

The Tesla V100 processors in the DGX-1 are five times faster than Pascal-based products. They use a range of new technologies, including NVLink 2.0 with a throughput of up to 300 GB/s. Each GPU has 5,120 cores and more than 21 billion transistors, and its HBM2 memory bandwidth is 900 GB/s.

Along with the graphics processors, each DGX-1 server includes two 20-core Intel Xeon E5-2698 v4 processors operating at 2.2 GHz. The server is equipped with four 1.92 TB solid-state drives and one 400 GB system SSD.

The NVIDIA solution is air cooled, so the rack with the DGX-1 servers is located at the opposite end of the computer room from the water-cooled RSC racks. The components inside each server are cooled by four large twin fans with a rotation speed of up to 8000 RPM.

The power consumption of one server under load can reach 3200 W, so an entire rack of five servers requires about 16 kW. To provide the necessary power supply, two three-phase APC AP8886 power distribution units, each rated for up to 32 A per phase, are installed in the server cabinet. The PDUs, in turn, are powered by a Riello Master HP 160 kVA UPS.

Each server has four 1600 W power supplies with N+1 redundancy. A dedicated controller distributes the load evenly across the power supplies.
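The power figures for the DGX-1 rack can be cross-checked with a short calculation. The sketch below uses only the numbers given in the text, with one labeled assumption: the PDU capacity is estimated for a 230 V phase-to-neutral supply, which is not stated in the source. The function names are illustrative.

```python
def rack_power_w(servers: int, per_server_w: int) -> int:
    """Peak power drawn by all servers in the rack."""
    return servers * per_server_w


def psu_capacity_after_one_failure_w(psu_count: int, psu_rating_w: int) -> int:
    """Capacity still available with N+1 redundancy after one PSU fails."""
    return (psu_count - 1) * psu_rating_w


def pdu_capacity_w(phase_voltage_v: int, amps_per_phase: int, phases: int = 3) -> int:
    """Approximate PDU capacity, assuming a balanced load and unity power factor."""
    return phases * phase_voltage_v * amps_per_phase


rack = rack_power_w(5, 3200)                      # five servers at 3200 W each
psu = psu_capacity_after_one_failure_w(4, 1600)   # four 1600 W supplies, N+1
pdu = pdu_capacity_w(230, 32)                     # ASSUMPTION: 230 V per phase

print(f"Rack peak power:                {rack} W")
print(f"PSU capacity with one failed:   {psu} W (server peak: 3200 W)")
print(f"Estimated capacity of one PDU:  {pdu} W")
```

With these numbers, three of the four power supplies still cover a server's 3200 W peak, and, under the 230 V assumption, a single PDU could carry the full 16 kW rack load.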

Communication with the rest of the cluster nodes is carried out over InfiniBand, a high-speed switched network that is widely used in high-performance computing because it offers very high throughput and low latency.

Picture 13. “Govorun” supercomputer
