Description of the ML/DL/HPC ecosystem

The wide adoption of neural network approaches and of machine learning and deep learning (ML/DL) methods and algorithms for solving a broad range of problems is driven by many factors. The main ones are the development of computing architectures (which matters especially when DL methods are used to train convolutional neural networks), the development of libraries that implement various algorithms, and the development of frameworks for building different neural network models. To support both the development of mathematical models and algorithms and resource-intensive computations, including computations on graphics accelerators, which significantly reduce calculation time, an ecosystem for ML/DL and data analysis tasks has been created for HybriLIT platform users and is being actively developed.

Video “Introduction into ML/DL/HPC Ecosystem”

Useful links:

Oksana Streltsova, Deputy Head of the Group, LIT JINR (in Russian)

Video by A.S. Vorontsov

Ecosystem for ML/DL/HPC tasks and data analysis

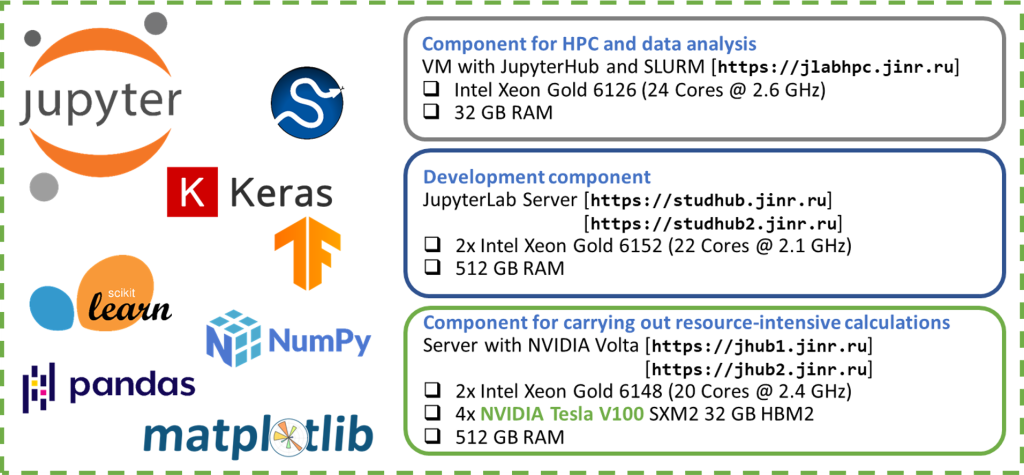

The ecosystem has the following components (Fig. 1):

- The HPClab component is designed for running calculations on the computing nodes of the HybriLIT platform, for application development and for scientific visualization – https://jlabhpc.jinr.ru;

- The educational component is designed for developing models and algorithms. It is based on JupyterHub, a multi-user platform for working with Jupyter Notebook (formerly known as IPython Notebook), which runs in a web browser – https://studhub.jinr.ru, https://studhub2.jinr.ru;

- The computation component is designed for resource-intensive, massively parallel calculations, for example, training neural networks on NVIDIA Volta graphics accelerators – https://jhub1.jinr.ru, https://jhub2.jinr.ru.

The parameters of the virtual machine (VM) used for the first component and of the servers used for the second and third components are given in Figure 1.

The third component includes 4 NVIDIA Tesla V100 32 GB graphics accelerators in the jhub1 and jhub2 servers.
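
To check from a notebook on jhub1 or jhub2 which accelerators the session can see, one option is to call `nvidia-smi` from Python. This is a minimal sketch that assumes the NVIDIA driver's `nvidia-smi` utility is on the `PATH` of the notebook server; the helper name `list_gpus` is hypothetical.

```python
import shutil
import subprocess


def list_gpus():
    """Return one "name, memory" string per GPU reported by nvidia-smi.

    Hypothetical helper (not part of any HybriLIT tool). Returns an empty
    list when nvidia-smi is not on PATH or when the query fails.
    """
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return []
    try:
        result = subprocess.run(
            [exe, "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            check=True,
            timeout=30,
        )
    except (subprocess.SubprocessError, OSError):
        return []
    # nvidia-smi prints one CSV line per GPU visible to this process.
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    gpus = list_gpus()
    print(f"{len(gpus)} GPU(s) visible")
    for gpu in gpus:
        print(gpu)
```

On a machine where `nvidia-smi` is not installed, the function returns an empty list rather than raising an error.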

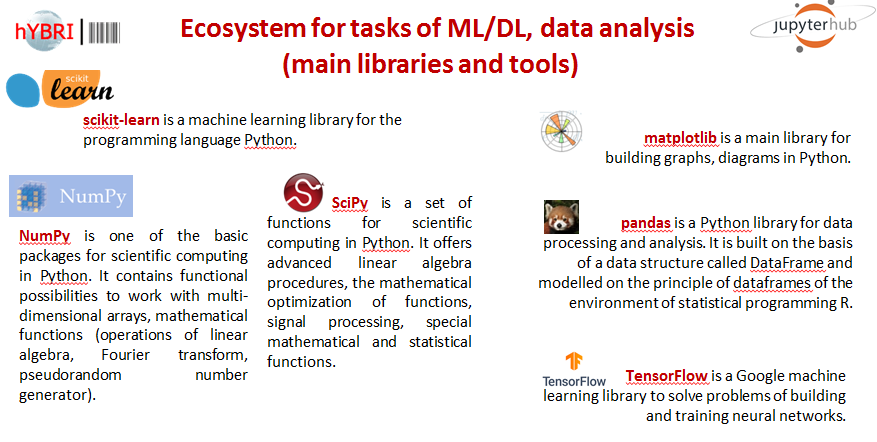

Figure 2 shows the most commonly used libraries and frameworks installed on the ecosystem components for solving ML/DL problems and data analysis tasks.
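
Since the installed set may differ between components, a quick way to see what a given kernel provides is to look packages up with the standard `importlib` machinery. The package list below is an assumption for illustration, not a copy of Figure 2, and `installed_versions` is a hypothetical helper.

```python
import importlib.metadata
import importlib.util

# Illustrative selection only (an assumption, not the list from Figure 2).
# Keys are import names, values are the corresponding distribution names.
PACKAGES = {
    "numpy": "numpy",
    "pandas": "pandas",
    "sklearn": "scikit-learn",
    "tensorflow": "tensorflow",
    "torch": "torch",
}


def installed_versions(packages=PACKAGES):
    """Map each import name to its installed version, or None if missing.

    Hypothetical helper for a notebook cell.
    """
    versions = {}
    for module_name, dist_name in packages.items():
        # find_spec locates the module without importing it.
        if importlib.util.find_spec(module_name) is None:
            versions[module_name] = None
            continue
        try:
            versions[module_name] = importlib.metadata.version(dist_name)
        except importlib.metadata.PackageNotFoundError:
            # Importable, but no distribution metadata under that name.
            versions[module_name] = "unknown"
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name:12s} {version or 'not installed'}")
```

Because `find_spec` does not import the module, the check stays fast even for large frameworks such as TensorFlow or PyTorch.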

Work within the ML/DL/HPC ecosystem

To get started you need to:

- Log in with your HybriLIT account in GitLab:

https://gitlab-hybrilit.jinr.ru/

- Log in to the components (authorization is done via GitLab):

| Development component (without graphics accelerators) | Component for resource-intensive calculations (with NVIDIA graphics accelerators) | Component for HPC on the HybriLIT platform nodes and data analysis (JupyterHub and SLURM) |
|---|---|---|
| https://studhub.jinr.ru, https://studhub2.jinr.ru | https://jhub1.jinr.ru, https://jhub2.jinr.ru | https://jlabhpc.jinr.ru/ |
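
The HPC component pairs JupyterHub with SLURM, so work on the HybriLIT nodes is submitted as batch jobs. As a sketch, a batch script can be assembled and handed to `sbatch` from Python. The `#SBATCH` options used (`--job-name`, `--time`, `--ntasks`, `--gres`) are standard SLURM options, but the default values, the helper names `build_sbatch_script` and `submit`, and the assumption that GPUs are requested via `--gres=gpu:N` are illustrative and not taken from the HybriLIT documentation.

```python
import shutil
import subprocess


def build_sbatch_script(command, job_name="test_job", time_limit="00:10:00",
                        ntasks=1, gpus=0):
    """Return the text of a minimal SLURM batch script that runs `command`.

    Hypothetical helper; default values are illustrative only.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --time={time_limit}",  # wall-clock limit, HH:MM:SS
        f"#SBATCH --ntasks={ntasks}",
    ]
    if gpus > 0:
        # Assumption: GPUs are exposed as a generic resource named "gpu".
        lines.append(f"#SBATCH --gres=gpu:{gpus}")
    lines.append(command)
    return "\n".join(lines) + "\n"


def submit(script_text):
    """Pass the script to sbatch on stdin and return its reply (hypothetical helper)."""
    sbatch = shutil.which("sbatch")
    if sbatch is None:
        raise RuntimeError("sbatch not found: run this where the SLURM client is installed")
    result = subprocess.run([sbatch], input=script_text,
                            capture_output=True, text=True, check=True)
    return result.stdout.strip()  # typically "Submitted batch job <id>"


if __name__ == "__main__":
    # Only print the script; call submit(script) explicitly to queue it.
    script = build_sbatch_script("hostname")
    print(script)
```

`sbatch` reads the script from standard input when no file name is given, which is why `submit` passes the text via `input=`. A `#SBATCH --partition=...` line may also be needed; take the partition name from the HybriLIT documentation rather than guessing it.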

Jupyter Notebook

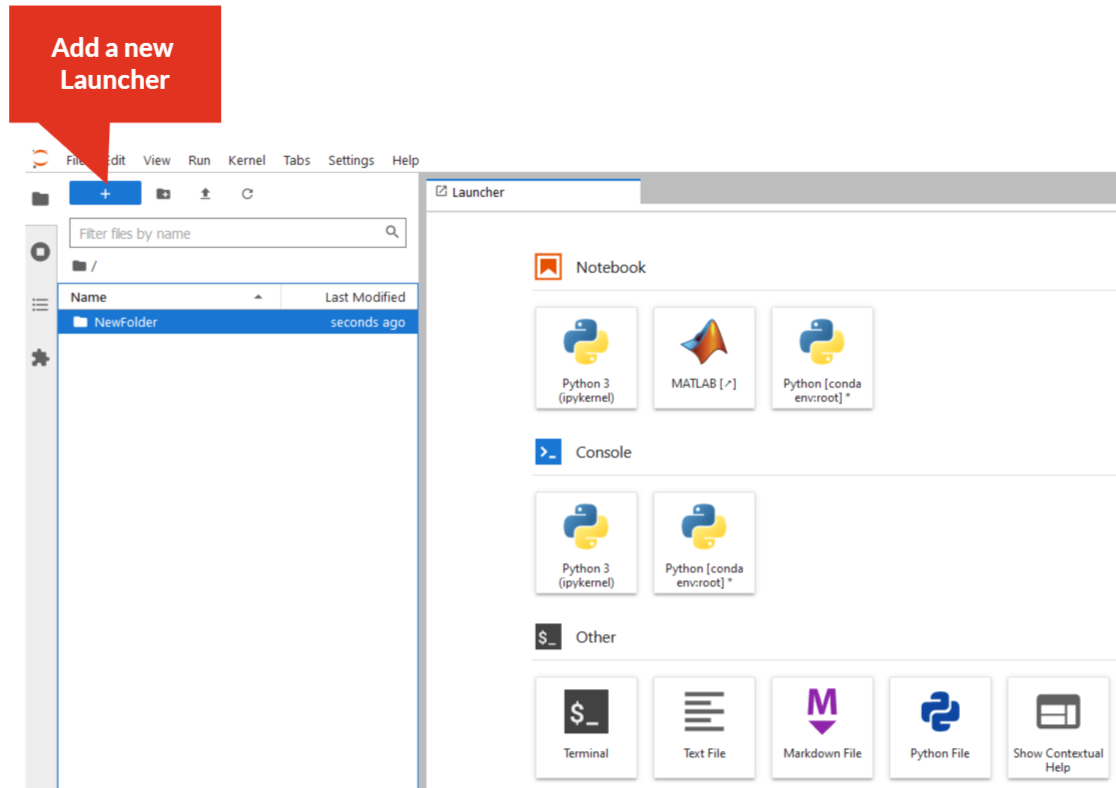

Once you are authorized, the Jupyter Notebook interactive environment opens:

Users have access to their home directories, which are located on the NFS/ZFS or Lustre file system.
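
To find out which of these file systems a given directory actually lives on, Linux lists every mount with its type in `/proc/mounts`, where NFS, ZFS and Lustre appear as `nfs`/`nfs4`, `zfs` and `lustre`. The sketch below picks the longest mount point that contains a path; it assumes a Linux host, and the function names are hypothetical.

```python
import os


def fs_type_for(path, mounts_text):
    """Return the file-system type of the mount that contains `path`.

    `mounts_text` uses the /proc/mounts format: device, mount point, type,
    options, ... separated by whitespace. The longest matching mount point
    wins. Octal escapes used for spaces in mount points are not decoded
    in this sketch. Hypothetical helper.
    """
    best_mount, best_type = "", None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        mount_point, fstype = fields[1], fields[2]
        # "/home" must match "/home/user" but not "/homework".
        prefix = mount_point.rstrip("/") + "/"
        if path == mount_point or path.startswith(prefix):
            if len(mount_point) > len(best_mount):
                best_mount, best_type = mount_point, fstype
    return best_type


def home_fs_type():
    """File-system type of the current user's home directory (Linux only)."""
    home = os.path.realpath(os.path.expanduser("~"))
    with open("/proc/mounts") as f:
        return fs_type_for(home, f.read())
```

Calling `home_fs_type()` in a notebook cell returns a string such as `nfs4` or `lustre`, depending on where the home directory is mounted.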

Getting started with Jupyter Notebook

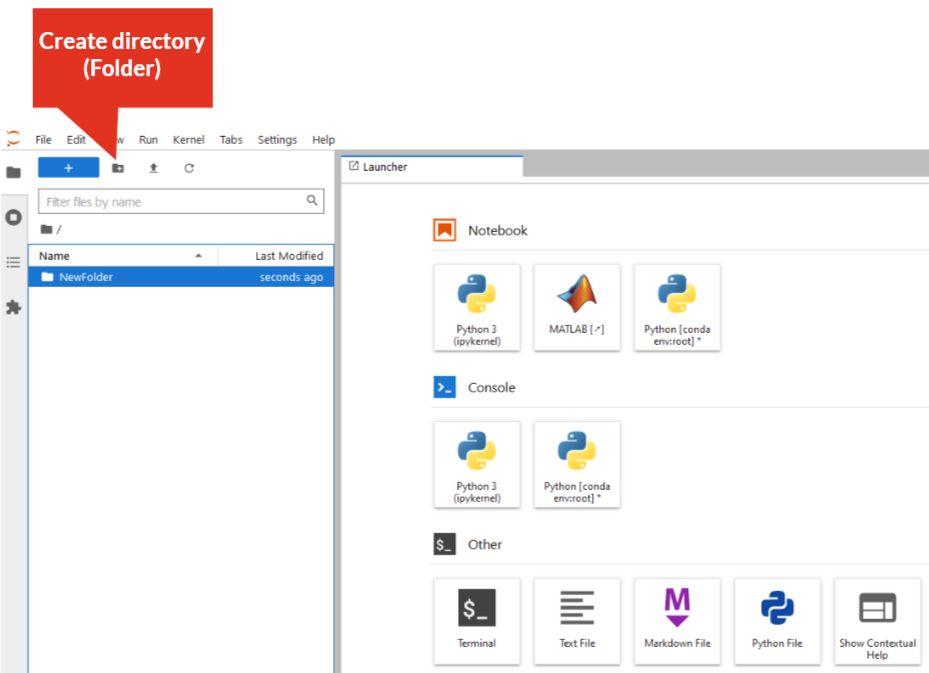

Create a directory:

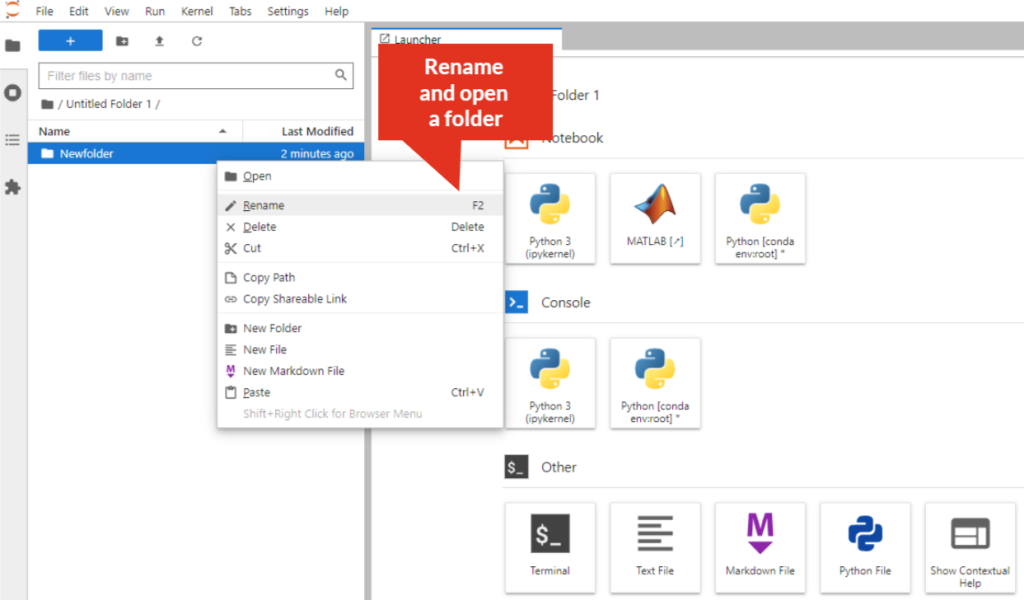
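
Directories can also be created from a notebook cell instead of the file browser. This sketch uses `pathlib`, creates the directory under the home directory by default, and rejects names containing whitespace, following the guide's rule that directory names must not contain spaces. `create_directory` is a hypothetical helper name.

```python
from pathlib import Path


def create_directory(name, base=None):
    """Create `base/name` (default base: the home directory) and return its path.

    Hypothetical helper. Existing directories are left untouched; names
    containing whitespace are rejected.
    """
    if not name or any(ch.isspace() for ch in name):
        raise ValueError(f"invalid directory name: {name!r}")
    root = Path.home() if base is None else Path(base)
    target = root / name
    # parents=True creates missing intermediate directories;
    # exist_ok=True makes re-running the cell harmless.
    target.mkdir(parents=True, exist_ok=True)
    return target
```

For example, `create_directory("my_project")` creates `~/my_project` and returns its path.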

Rename a directory:

– right-click the directory/folder and select “Rename” from the context menu, or select the directory/folder and press “F2”. The directory/folder name must not contain spaces!

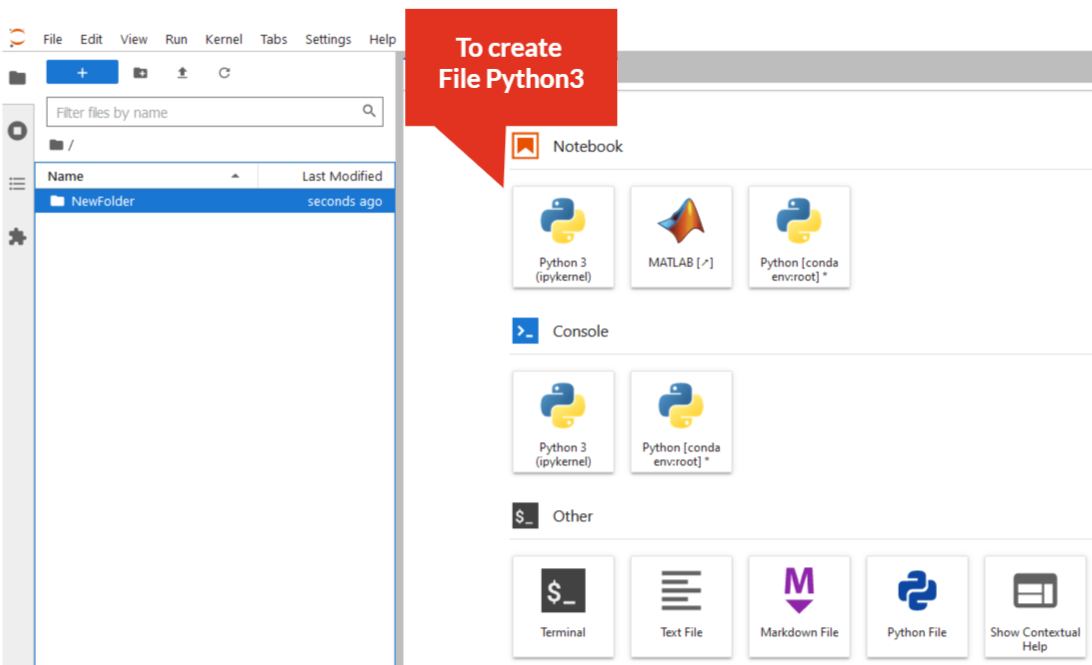
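
The same rename can be done programmatically with `pathlib`. This is a minimal sketch with a hypothetical helper `rename_directory` that refuses names with spaces and does not overwrite an existing directory.

```python
from pathlib import Path


def rename_directory(path, new_name):
    """Rename the directory at `path` to `new_name` in the same parent folder.

    Hypothetical helper mirroring the file browser's "Rename" action and
    enforcing the no-spaces rule for directory names.
    """
    src = Path(path)
    if not src.is_dir():
        raise NotADirectoryError(f"not a directory: {src}")
    if not new_name or "/" in new_name or any(ch.isspace() for ch in new_name):
        raise ValueError(f"invalid directory name: {new_name!r}")
    dst = src.with_name(new_name)
    if dst.exists():
        raise FileExistsError(f"target already exists: {dst}")
    return src.rename(dst)  # returns the new Path (Python 3.8+)
```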

Create a Python 3 notebook:

– click the “Python3” icon
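
A convenient first cell in a new notebook is one that shows which Python interpreter and host the kernel runs on, which helps confirm that you are working on the intended component. The sketch uses only the standard library; the helper name `session_info` and the chosen fields are illustrative.

```python
import os
import platform
import sys


def session_info():
    """Collect basic facts about the running kernel (hypothetical helper)."""
    return {
        "python": platform.python_version(),  # e.g. "3.10.12"
        "executable": sys.executable,          # interpreter behind the kernel
        "host": platform.node(),               # server the kernel runs on
        "cwd": os.getcwd(),                    # notebook's working directory
    }


if __name__ == "__main__":
    for key, value in session_info().items():
        print(f"{key:10s} {value}")
```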

Add a new tab (New Launcher):

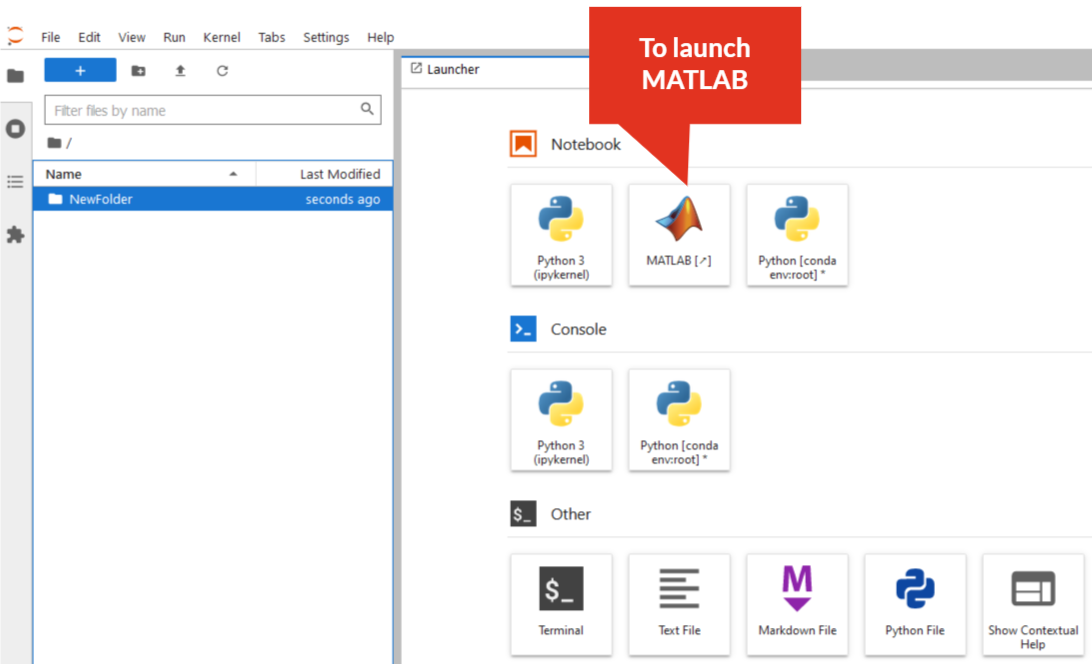

MATLAB in the JupyterHub environment:

– click the “MATLAB” icon, then follow the instructions.